Qwen3.5 Local Deployment

Deploy Qwen3.5 397B locally with Unsloth Dynamic 2.0 quantization. Run it on a Mac or PC with llama.cpp, SGLang, or MLX, and serve it through an OpenAI-compatible API.


The previous article introduced Qwen3.5, its architectural innovations, and its benchmark results. This one is more practical: how to actually run it locally.

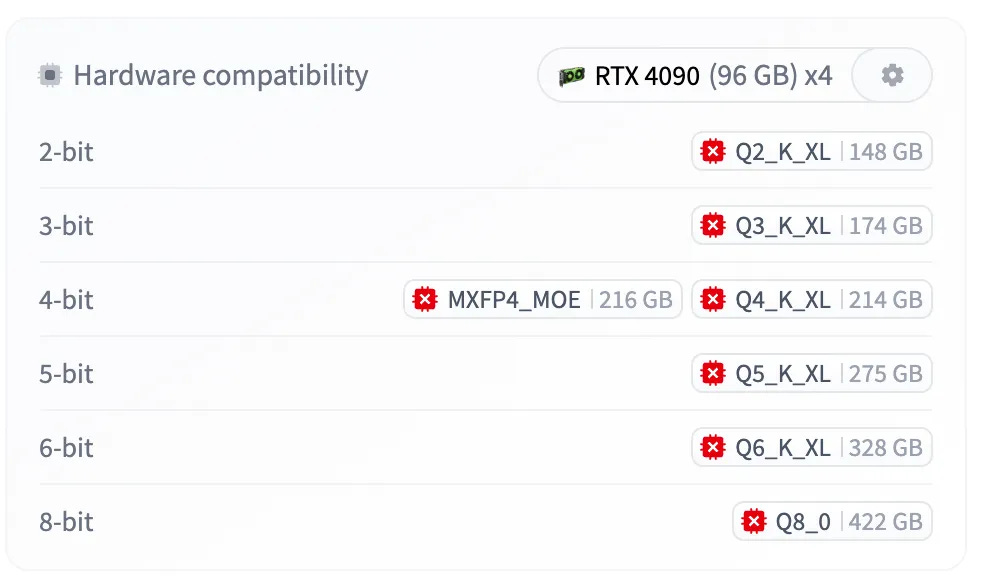

Qwen3.5-397B-A17B activates only 17B parameters per token, but all 397B still have to be stored, so the full model weighs in at 807GB. That sounds intimidating, but in practice, thanks to Unsloth’s Dynamic 2.0 quantization, a Mac with 192GB of unified memory can run the 3-bit version, and a Mac with 256GB can run the 4-bit version.
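A quick back-of-the-envelope check shows why those memory tiers line up. The sketch below assumes the nominal size of the weights is simply parameters × bits ÷ 8 bytes, in decimal GB. It ignores file overhead, the KV cache, the OS, and the higher-precision layers described in the next section, so treat the numbers as a lower bound rather than the actual GGUF file sizes.

```python
def weight_gb(total_params: float, bits_per_weight: float) -> float:
    """Nominal weight size in decimal GB: params * bits / 8 bytes (lower bound)."""
    return total_params * bits_per_weight / 8 / 1e9

# All experts must be stored, not just the 17B active per token.
TOTAL_PARAMS = 397e9

for bits in (16, 4, 3):
    print(f"{bits:>2}-bit: ~{weight_gb(TOTAL_PARAMS, bits):.1f} GB")
```

At 16 bits this gives about 794GB, close to the quoted 807GB. At 3 bits the weights come to roughly 149GB, leaving headroom in 192GB, while 4 bits needs roughly 199GB, which already exceeds 192GB and is why the 4-bit version calls for the 256GB machine.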

Unsloth Dynamic 2.0 Quantization

Unsloth was the first to release GGUF files for Qwen3.5-397B-A17B (Qwen gave Unsloth day-zero access), quantized with its own Dynamic 2.0 strategy.

Layers judged critical are automatically promoted to 8-bit or even 16-bit precision, while less sensitive layers are quantized more aggressively. As a result, even at an overall 4-bit quantization, the model’s reasoning ability holds up instead of falling apart.
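The idea of promoting critical layers can be illustrated with a toy mixed-precision plan. This is a minimal sketch, not Unsloth’s actual algorithm: the `sensitivity` scores, the `hi`/`mid` thresholds, and the layer names are all hypothetical. It only shows how per-layer bit assignment works and how it raises the effective bits per weight above the base precision.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str            # hypothetical layer name
    params: int          # number of weights in the layer
    sensitivity: float   # hypothetical importance score in [0, 1]


def assign_bits(layers, base_bits=4, hi=0.9, mid=0.6):
    """Promote sensitive layers to 16 or 8 bits; everything else gets base_bits.

    The thresholds are illustrative assumptions, not Unsloth's settings.
    """
    plan = {}
    for layer in layers:
        if layer.sensitivity >= hi:
            plan[layer.name] = 16
        elif layer.sensitivity >= mid:
            plan[layer.name] = 8
        else:
            plan[layer.name] = base_bits
    return plan


def effective_bits(layers, plan):
    """Parameter-weighted average bits per weight across all layers."""
    total = sum(layer.params for layer in layers)
    return sum(layer.params * plan[layer.name] for layer in layers) / total


layers = [
    Layer("embed_tokens", 1_000, 0.95),
    Layer("attn.q_proj", 2_000, 0.70),
    Layer("moe.expert_0", 7_000, 0.20),
]
plan = assign_bits(layers)
print(plan)                          # {'embed_tokens': 16, 'attn.q_proj': 8, 'moe.expert_0': 4}
print(effective_bits(layers, plan))  # 6.0
```

Because the promoted layers carry more bits, a mixed-precision "4-bit" model averages more than 4 bits per weight, which is one reason a dynamic quant is larger than the naive estimate from the previous section.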