Zhipu’s New Model Also Uses DeepSeek’s MLA, Runs on Apple M5

Zhipu IPO follow-up: GLM-4.7-Flash is a free 30B MoE model with 3B active parameters, uses MLA, and runs locally on M5 at 43 tok/s


Zhipu AI has a new release following its IPO.

Zhipu AI has released GLM-4.7-Flash, a new open-source lightweight large language model that directly replaces the previous-generation GLM-4.5-Flash. API access is free.

It is a Mixture of Experts (MoE) model with 30B total parameters, of which only 3B are activated. Zhipu officially positions it as a “local programming and intelligent agent assistant.”
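To make the 30B-total, 3B-active split concrete, here is a rough back-of-the-envelope sketch. The parameter counts come from the announcement; the bit widths (16, 8, 4) are illustrative assumptions, not figures Zhipu has published, and the estimate covers weights only, ignoring KV cache and runtime overhead.

```python
# Rough weight-memory arithmetic for a 30B-total / 3B-active MoE model.
# Parameter counts are from the article; bit widths are assumed for illustration.

TOTAL_PARAMS = 30e9   # all experts must be resident in memory
ACTIVE_PARAMS = 3e9   # parameters actually used in a forward pass


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (10^9 bytes), weights only."""
    return num_params * bits_per_param / 8 / 1e9


# Share of the model that does work on any given forward pass.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

if __name__ == "__main__":
    print(f"active fraction: {active_fraction:.0%}")
    for bits in (16, 8, 4):  # assumed precisions
        gb = weight_memory_gb(TOTAL_PARAMS, bits)
        print(f"{bits}-bit weights: ~{gb:.0f} GB")
```

The point of the split is that memory scales with the 30B total (about 15 GB of weights at an assumed 4 bits per parameter), while per-token compute scales with the 3B active parameters, which is what makes a model of this shape plausible on a laptop.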

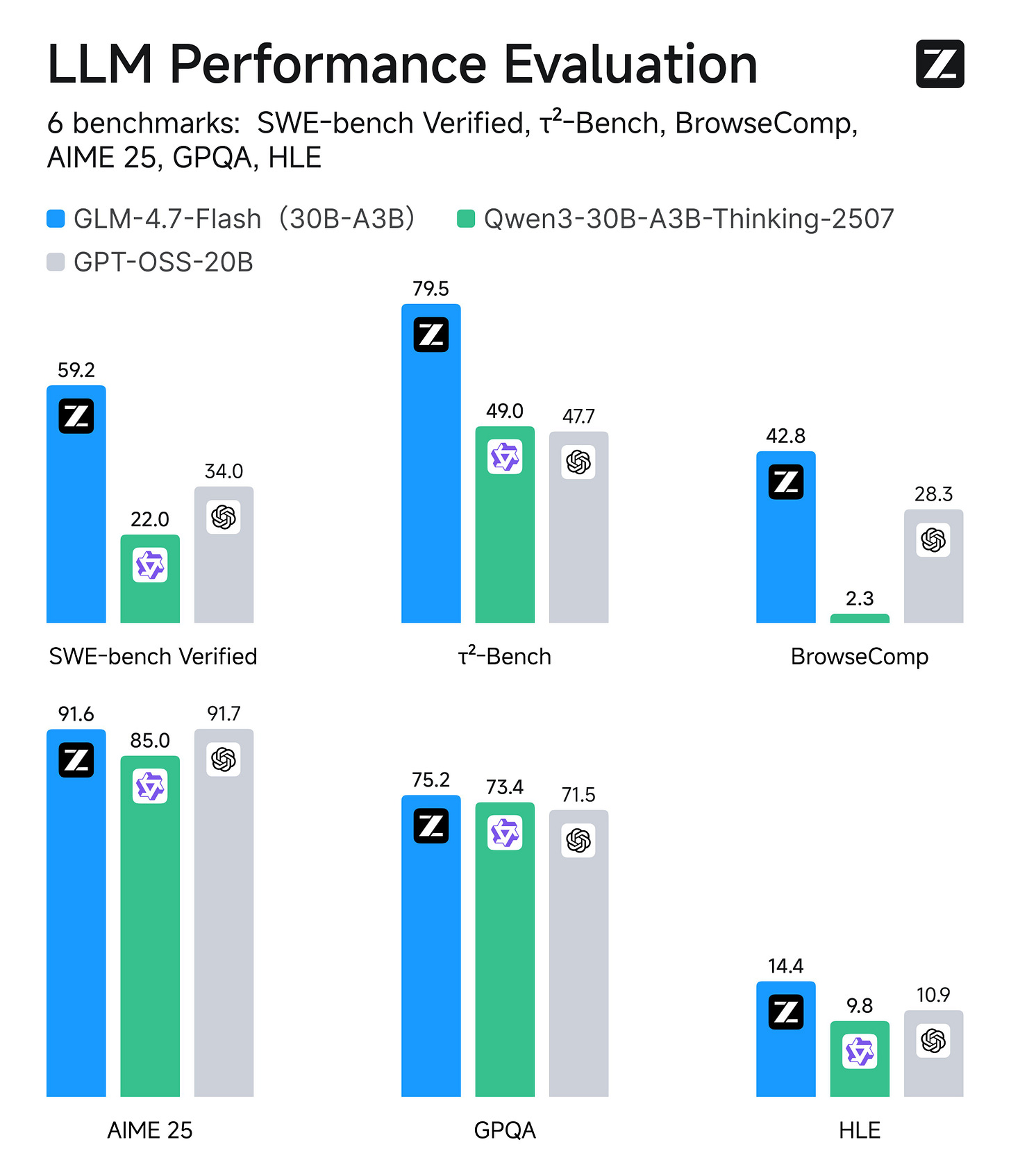

On the SWE-bench Verified code-repair benchmark, GLM-4.7-Flash scored 59.2. It also significantly outperformed similarly sized models such as Qwen3-30B and GPT-OSS-20B on evaluations including Humanity’s Last Exam.