Why Speed Alone Will Break Your AI

Slowing down is not resistance, it is resilience.


When Microsoft rushed out its AI chatbot Tay in 2016, it went viral for all the wrong reasons: offensive outputs, inconsistency, and overnight brand damage. It was fast, but brittle. That same pattern repeats across the industry: teams prioritise speed, ship early, and then discover their systems cannot hold up under real-world use.

TL;DR

Everyone is chasing speed with AI. But fast adoption without reflection builds shallow systems that collapse under pressure. Slowing down creates trust, resilience, and long-term leverage.

The Illusion of Acceleration

Speed without reflection is where most systems fail. This piece is for anyone building, deploying, or experimenting with LLMs who wants their work to last. It offers a way of thinking about why slowing down is not a brake but a multiplier, helping you build trust, avoid fragility, and design systems that hold up under pressure.

I’m Sam Illingworth, a UK-based Professor working on how we use LLMs and AI tools differently. My focus is on encouraging people to slow down how they use AI and treat it as a tool for reflection, depth, and resilience. In this guest article I’ll explore why speed on its own is fragile and how pausing can give both individuals and organisations a real advantage.

Most AI coverage celebrates acceleration. Models are measured by speed, companies pitch products on time saved, and users are trained to expect instant results. This creates the sense that the future belongs to those who move fastest. But acceleration is not the same as progress. A system can be quick and still be brittle, efficient in the short term but hollow when exposed to real conditions.

We have seen this in practice: tools that hallucinate legal citations at scale, customer-facing bots that break under messy queries, and enterprise rollouts that stall as infrastructure costs spiral. These failures are not technical mysteries; they are the cost of moving too fast without reflection.

Research also backs this up: Francesca Gino, Gary Pisano, and colleagues at Harvard Business School found that people who paused to reflect on their work performed up to 25% better than those who simply kept going. Reflection, even when it seems slower, compounds into deeper competence and stronger results. The same principle applies to AI adoption. Without deliberate pauses, teams risk building fragile systems that appear fast but collapse under pressure.

Reflection as Leverage

Slowing down is not about rejecting AI but about using it differently. Reflection turns speed into leverage. For example, when you receive a model’s output, the default impulse is to accept or discard it immediately. But pausing to ask the model to surface its assumptions, or to admit when it doesn’t know the answer, shifts the exchange from shallow to deep.

To try this yourself, before you accept an output, pause and ask:

What assumptions is the model making about my request? (ASSUMPTIONS)

What alternative answers might exist? (ALTERNATIVES)

What reasoning steps should be made visible? (TRANSPARENCY)

This slows the process, but the benefit is cumulative. Over time, you are not just collecting outputs; you are training yourself and your system to think with greater clarity. Reflection makes hidden reasoning visible, uncovers edge cases, and builds resilience into the workflow. It compounds like interest.
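One way to make the three questions above routine is to wrap them in a reusable follow-up prompt. The sketch below is only an illustration: the name `build_reflection_prompt` and the wording of the prompt are assumptions made for this example, not part of any library. It deliberately stops short of calling a model, so the resulting text can be pasted into whichever tool you already use.

```python
# Hypothetical helper: names and wording are illustrative assumptions.
REFLECTION_QUESTIONS = {
    "ASSUMPTIONS": "What assumptions did you make about my request?",
    "ALTERNATIVES": "What alternative answers might exist?",
    "TRANSPARENCY": "Which reasoning steps should be made visible?",
}


def build_reflection_prompt(request: str, output: str) -> str:
    """Return a follow-up prompt asking the model to reflect on its own answer."""
    lines = [
        "Before I accept your answer, please reflect on it.",
        f"My original request: {request}",
        f"Your answer: {output}",
        "",
    ]
    # One line per checkpoint, labelled so the reply is easy to scan.
    for label, question in REFLECTION_QUESTIONS.items():
        lines.append(f"{label}: {question}")
    lines.append("If you do not know something, say so plainly.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_reflection_prompt(
        request="Summarise our refund policy for a customer email.",
        output="Refunds are available within 30 days.",
    ))
```

Keeping the questions in one place means the pause becomes a habit rather than something you have to remember each time.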


Reflection also builds trust. Trust does not come from a fast response, but from consistent and transparent reasoning. A user who sees a system surface its own assumptions is more likely to believe in its outputs. Slowing down enough to explain, test, and verify creates depth that speed alone cannot. As Thomas Mitchell argues, transparency is not only about exposing inner workings but about making reasoning practices visible in ways that support justified belief. The kind of transparency that matters most is not every step of a process, but evidence of reliability over time. Showing how a system has performed before, or the grounds on which its outputs rest, offers a foundation for confidence without removing the possibility of trust.

This distinction has practical implications for design. A learning tool, for example, does not need to reveal every calculation to its users. Instead, it can share summaries of its performance, highlight assumptions in plain language, and explain limits where they exist. In this way, type transparency (evidence about how the system performs in general) helps students or educators to place reasonable trust in its guidance, while token transparency (a full account of each individual output) would risk overwhelming them and undermining the willingness to trust. What matters in practice is building a rhythm of reflection that makes reasoning habits visible and dependable.

The Cost of Ignoring Pauses

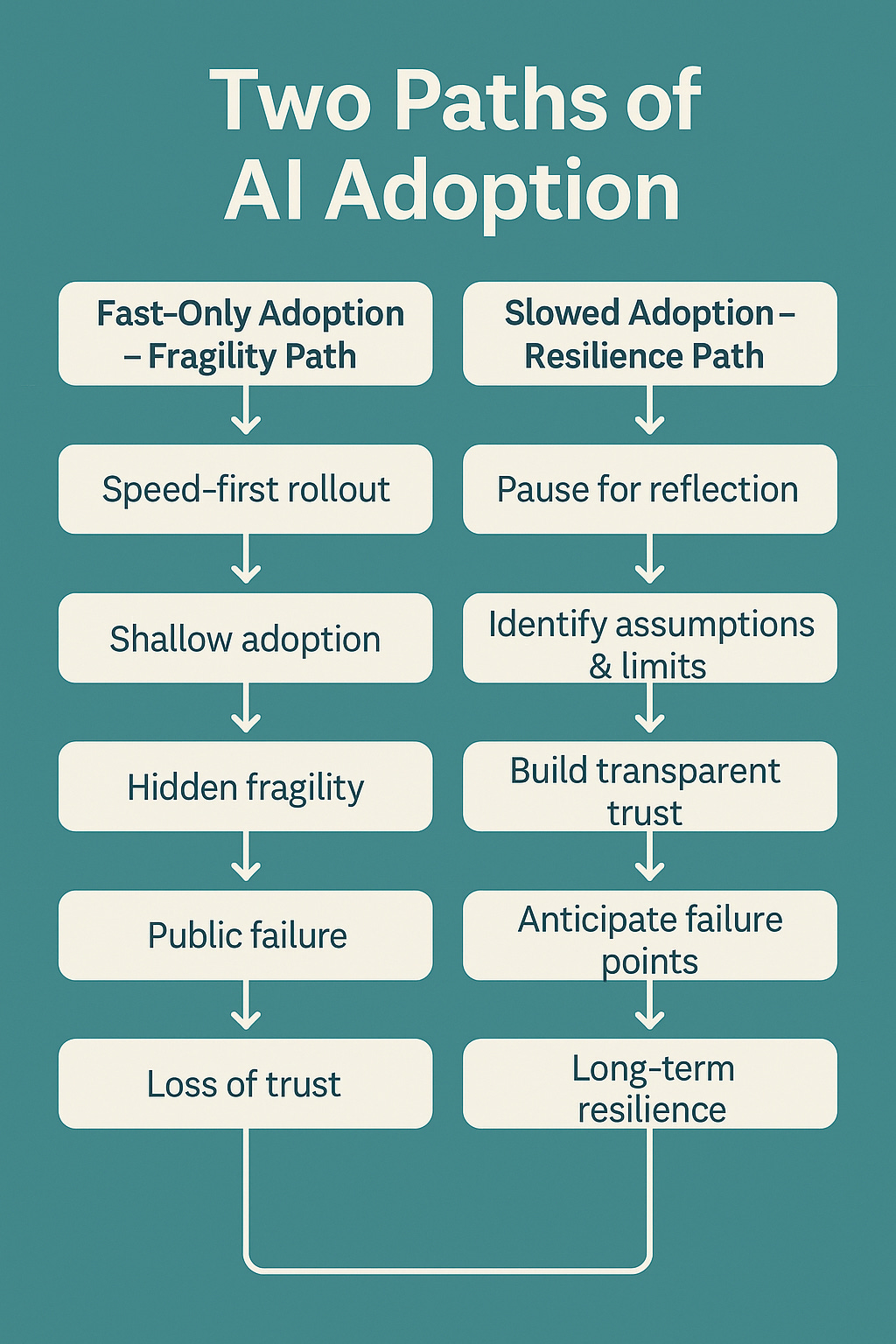

Organisations that move too quickly often discover that early gains vanish under real conditions. Infrastructure costs spiral as rushed systems need rebuilding, users churn when outputs feel inconsistent, and entire projects stall under technical debt. A product shipped a month early can end up costing a year in lost trust and recovery.

By contrast, deliberate slowing creates space for failure before it becomes public. Stress testing with messy inputs, adding reflective checkpoints in workflows, and anticipating potential breakdowns prevents later crises. These practices may seem like delays, but they are investments in resilience.

Before you deploy a system, pause and ask:

If it failed tomorrow, what would be the likely causes? (FAILURE POINTS)

What guardrails are missing? (RESILIENCE)

Where do we need to test with messy, real inputs? (STRESS TEST)

The act of slowing down to imagine failure helps surface hidden fragilities and shifts attention from firefighting to foresight.
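A stress test with messy inputs does not need to be elaborate. The sketch below assumes your system can be wrapped as a plain function that takes text and returns text; `stress_test`, `MESSY_INPUTS`, and the toy `fragile_bot` are all hypothetical names invented for this example. It simply records which inputs make the system crash or return nothing.

```python
from typing import Callable, List, Tuple

# Illustrative messy inputs: empty, whitespace-only, typos, shouting, accents.
MESSY_INPUTS = [
    "",
    "   ",
    "wher is my ordr??",
    "REFUND NOW!!! " * 50,
    "café order n°42",
]


def stress_test(
    system: Callable[[str], str], inputs: List[str]
) -> List[Tuple[str, str]]:
    """Run each input through the system and collect (input, reason) failures."""
    failures = []
    for text in inputs:
        try:
            reply = system(text)
        except Exception as exc:  # a crash is exactly what we want to surface
            failures.append((text, f"raised {type(exc).__name__}"))
            continue
        if not isinstance(reply, str) or not reply.strip():
            failures.append((text, "empty reply"))
    return failures


def fragile_bot(text: str) -> str:
    """Toy stand-in for a real system: wrongly assumes a first word exists."""
    first_word = text.split()[0]
    return f"You asked about '{first_word}'."


if __name__ == "__main__":
    for text, reason in stress_test(fragile_bot, MESSY_INPUTS):
        print(f"{text!r}: {reason}")
```

Here the toy bot fails on the empty and whitespace-only inputs, which is the kind of hidden fragility that is far cheaper to find before launch than after.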

Slowing down also forces teams to confront trade-offs. It reveals where infrastructure is fragile, where assumptions do not hold, and where features are missing. Shallow adoption looks fast but breeds fragility. Deep adoption looks slower, but the resilience it creates is what compounds over time.

The Human Edge

The future of AI will not belong to the fastest adopters but to those who take the time to design for endurance. Systems that rush to market without trust or usability may capture early attention, but they will not last. The real edge lies with teams and individuals who pause, reflect, and build for long-term use.


Pausing is not a weakness. It is how humans add value in an AI-saturated environment. While machines accelerate, humans can slow down, notice gaps, and make choices about what truly matters. This is where competitive advantage will be found: in the ability to resist the lure of speed for its own sake and to instead focus on depth, reflection, and resilience.

Moving Forward: Your Next Steps

Before you roll out your next model or workflow, pause and ask:

What assumptions is this system making? (ASSUMPTIONS)

Where is it most likely to break? (FAILURE POINTS)

How will we build trust over time? (TRANSPARENCY)

These checkpoints are not delays. They are the foundations of systems that endure when the hype cycle moves on.

Short Bio

Sam Illingworth is a Professor based in Edinburgh, exploring how large language models can be used for reflection and resilience rather than speed alone. He writes Slow AI, a newsletter offering weekly creative prompts for mindful and ethical use of AI.