Transformers v5: Five Years in the Making

Transformers v5 lands: 5 years, 1.2B installs, PyTorch-only, faster training & quantized inference.

Hugging Face recently published the first release candidate (RC) of Transformers v5, v5.0.0rc0.

This update marks a significant milestone for the world’s most popular AI infrastructure library, officially completing a five-year technical cycle from v4 to v5.

Transformers is Hugging Face’s flagship open-source project. Since v4 was released in November 2020, its daily downloads have grown from 20,000 to over 3 million, and total installations now exceed 1.2 billion.

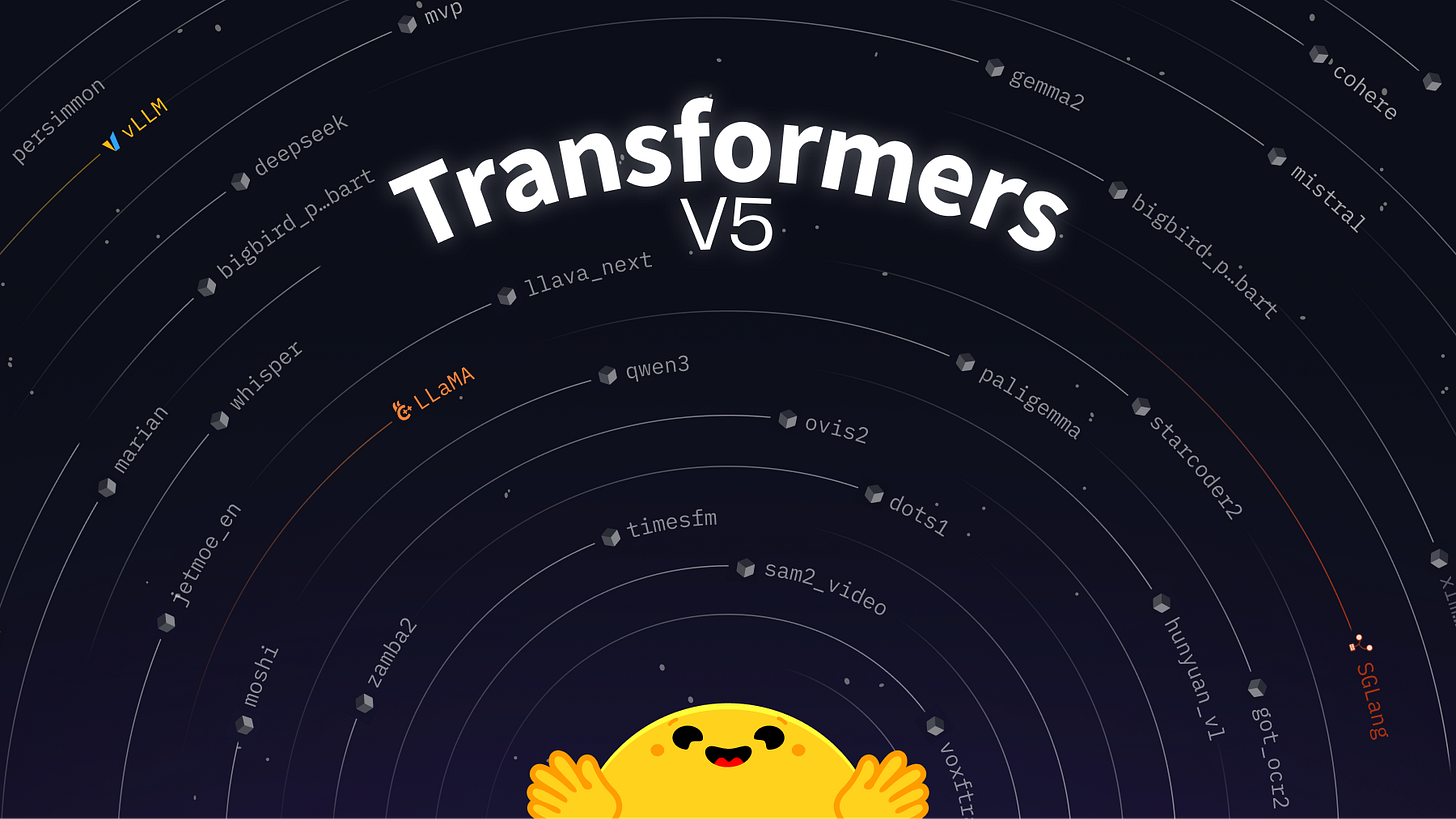

The library has shaped how the industry uses models. Supported architectures have grown from 40 to over 400, and community-contributed model weights now exceed 750,000, spanning text, vision, audio, and multimodal domains.

The official announcement stresses that in artificial intelligence, “reinvention” is key to staying relevant over the long term. As the leading model definition library in the ecosystem, Transformers must keep evolving and restructuring itself.

v5 makes PyTorch the sole core backend and focuses on four areas: simplicity, extending training support from fine-tuning to pre-training, interoperability with high-performance inference engines, and making quantization a first-class feature.