The Principles of Transformer Technology: The Foundation of Large Model Architectures (Part 2)

Explore the Transformer's decoder architecture, including masked self-attention and Encoder-Decoder Attention, and learn its key advantages: parallel processing, the ability to capture long-range dependencies, and scalability.

Welcome to the "Practical Application of AI Large Language Model Systems" series.

In the previous lesson, we walked through how each layer of the encoder processes its input. This time, we focus on the decoder.

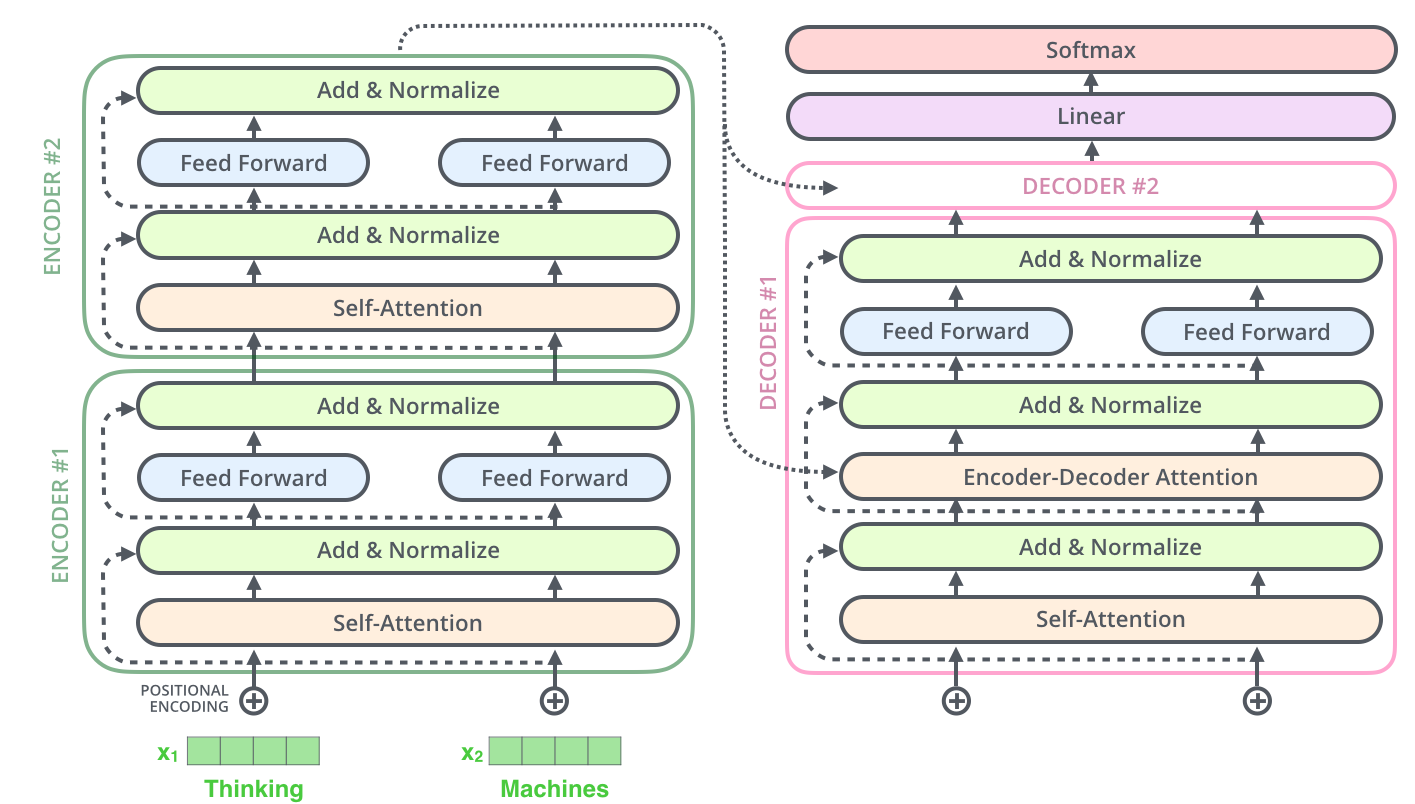

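Let's start with a more detailed architecture diagram. Compared with an encoder layer, each decoder layer contains one additional sublayer, the Encoder-Decoder Attention layer, which sits between the masked self-attention layer and the feed-forward network. We will go through these sublayers in order.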
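To make the order of the sublayers concrete, here is a minimal pure-Python sketch of one decoder layer. It is an illustration under simplifying assumptions, not this series' implementation: a single attention head, no learned query/key/value/output projections, a residual connection followed by layer normalization after each sublayer (the ordering used in the original Transformer paper), and random feed-forward weights. All function names are hypothetical.

```python
import math
import random

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]  # exp(-inf) == 0.0 for masked scores
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # a: n x k, b: k x m -> n x m
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def attention(queries, keys, values, causal=False):
    # Scaled dot-product attention (single head, no learned projections: an assumption).
    d = len(queries[0])
    out = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future target positions
            else:
                scores.append(sum(qa * ka for qa, ka in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def layer_norm(x, eps=1e-5):
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def feed_forward(x, w1, w2):
    # Position-wise FFN: ReLU(x @ w1) @ w2, applied to each position independently.
    hidden = [[max(0.0, v) for v in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def decoder_layer(dec_in, enc_out, w1, w2):
    # 1. Masked self-attention: each target position attends to itself and earlier ones.
    x = layer_norm(add(dec_in, attention(dec_in, dec_in, dec_in, causal=True)))
    # 2. Encoder-Decoder Attention: queries come from the decoder,
    #    keys and values come from the encoder output.
    x = layer_norm(add(x, attention(x, enc_out, enc_out)))
    # 3. Feed-forward network.
    return layer_norm(add(x, feed_forward(x, w1, w2)))

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

d_model, d_ff = 4, 8
enc_out = rand_matrix(5, d_model)  # encoder output for 5 source tokens
dec_in = rand_matrix(3, d_model)   # embeddings of the 3 target tokens generated so far
w1, w2 = rand_matrix(d_model, d_ff), rand_matrix(d_ff, d_model)
out = decoder_layer(dec_in, enc_out, w1, w2)
print(len(out), len(out[0]))  # 3 4
```

Note the asymmetry in step 2: the output keeps the decoder's sequence length (3) even though it attends over all 5 encoder positions. Because of the causal mask in step 1, changing a later target token leaves the outputs for earlier positions unchanged, which is what allows all target positions to be processed in parallel during training.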