The Disruption of the Music Industry by AI: A Peek into the Future

A data-driven analysis of popular Generative AI Music Models

Hi Readers! 👋

I came across Hugo Acosta on Substack. He specializes in transforming data into engaging and informative stories.

Hugo Acosta is passionate about exploring the impact of emerging technologies and pop culture, presenting these insights through compelling visuals and data-driven narratives.

Hugo Acosta's work combines creativity and data analysis to foster meaningful conversations and inspire forward-thinking perspectives.

Below is a guest article by Hugo Acosta.

The music industry is no stranger to disruption. Over the years, it has experienced dramatic changes, from the shift from physical to digital music sales at the beginning of the 21st century to the rise of digital streaming platforms in recent years. Today, we stand on the brink of a new revolution: Artificial Intelligence in music creation. This shift has the potential to radically transform how music is made, consumed, and even valued. I think the question is not how but when we will see the biggest AI-driven disruption in music.

In this post, we’ll explore the possible impacts of AI on the music industry and uncover trends through data-driven insights on some popular Generative AI music models. But before we dive into the data, let's look at the bigger picture.

A Booming Industry with a Track of Constant Change

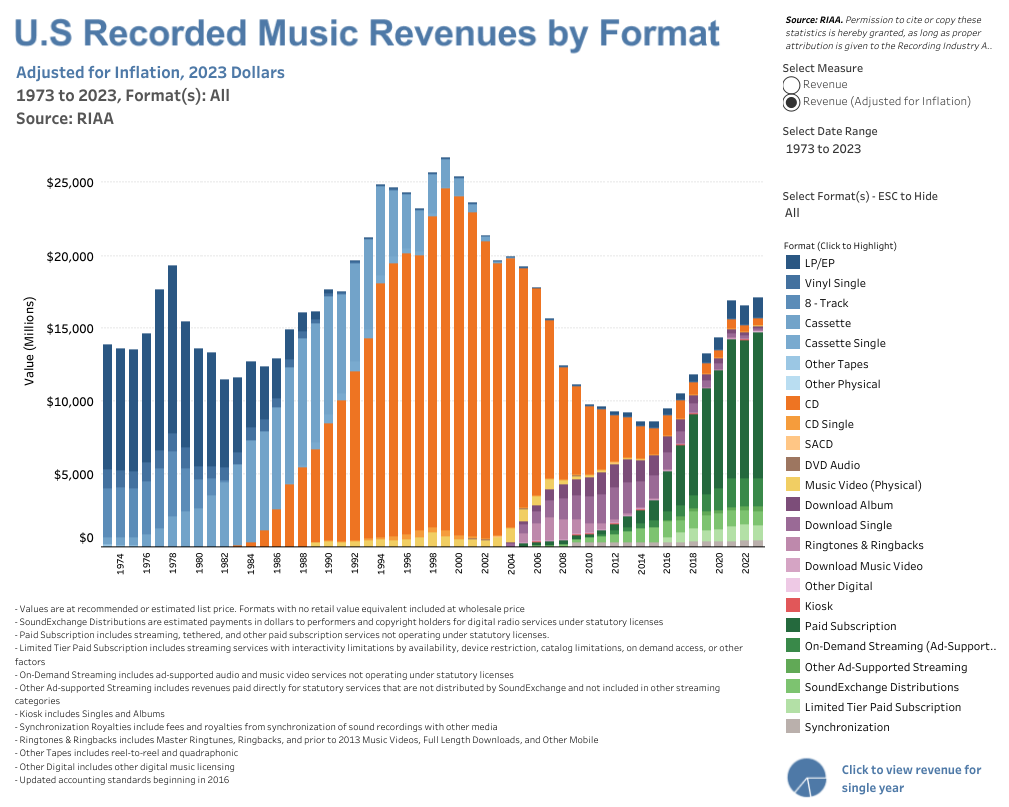

The global music industry has seen steady growth over the years, driven by the adoption of successive formats. The pattern is clear: regardless of the medium (8-tracks, vinyl, CDs, digital downloads, etc.), revenue grows steadily and then drops sharply, often because listeners adopt a new medium for consuming music, as the chart shows.

As you can see from the graph, the music industry has been defined by four distinct eras (at least since the 1970s):

The LP/Vinyl era (1970s)

The Cassette era (1980s - early 1990s)

The CD era (1990s - early 2010s)

The Streaming era (current)

Given the cyclical pattern that the data seems to corroborate, it is only natural to expect a new player to emerge in the near future: Generative AI music.

Ok, so we’ve talked about the different eras that recorded music has gone through in the last 50 years, but what about the potential economic impact of AI-generated music on this industry? The wonderful infographic by Visual Capitalist below helps us understand and quantify the disruption AI-generated music could cause: simply put, it’s billions of dollars.

The total cumulative revenue of the music industry over the last 50 years is $771 billion (adjusted for inflation). In 2023, streaming accounted for $13B of the $17.1B in total revenue, and these figures will undoubtedly continue to rise.
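To put the 2023 figures in proportion, here is a quick back-of-the-envelope calculation. It uses only the two numbers quoted above; the variable names are my own.

```python
# 2023 figures quoted in the article, in billions of US dollars.
streaming_revenue_2023 = 13.0
total_revenue_2023 = 17.1

# Share of 2023 recorded-music revenue that came from streaming.
streaming_share = streaming_revenue_2023 / total_revenue_2023
print(f"Streaming share of 2023 revenue: {streaming_share:.1%}")
```

In other words, streaming alone makes up roughly three quarters of the 2023 total.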

AI’s Role in Music Creation: The Changing Landscape

AI-driven tools are already being used to create music, generate melodies, and assist artists with production. Generative models like Suno, Udio, MusicGen, AudioLDM, and others are providing new ways to create sounds, generate pieces in multiple genres, and produce music in record time. These models have helped democratize music creation for non-artists, and I anticipate that the growing reliance on them is likely to disrupt traditional workflows in music creation.

But what exactly does the AI landscape look like when we dig deeper into user preferences and the kinds of music these models generate? Let’s take a look at the data and try to find out.

Exploring AI Model Generation Samples and User Preferences

To provide a clearer picture of how AI can disrupt the music industry, I analyzed the following:

Top 10 Most Used Tags: AI models are producing music across various genres, but some genres are more frequently generated than others. This provides insight into which genres and artists are potentially more at risk of being disrupted by AI.

Model Preference Distribution: By comparing which AI models users preferred, I was able to reveal which models are more successful at meeting user expectations and producing quality results. The data shows a clear preference for certain models over others. Existing and upcoming artists can use this data to decide which model to work with.

Tag Frequencies by Preferred Model: Better-performing models consistently produce music associated with specific genres. This can give us a sense of which AI tools are better suited to particular musical styles and user preferences.

Top 10 Most Used Tags

Using data from Hugging Face, I evaluated which music genres are most likely to be produced by different state-of-the-art Generative AI Music Models. The models used to generate this dataset are as follows:

AudioLDM 2 Large

AudioLDM 2 Music

Mustango

MusicGen Medium

MusicGen Large

Riffusion

Stable Audio v1

Stable Audio v2

MTG-Jamendo*

Udio

Suno v3

Suno v3.5

*Please note that MTG-Jamendo comes from a repository for auto-tagging music hosted on Jamendo, a popular music service where indie artists upload their work. In other words, think of this dataset as a baseline of human-made music against which AI-generated music can be compared.

As you can see from the chart below, electronic, pop, and soundtrack music are the most popular tags these models generated.

The data suggests that EDM artists, pop artists, and movie productions are the most likely to be disrupted by AI, at least in the near future.
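For readers who want to reproduce this kind of tag ranking, here is a minimal sketch of how the counting could be done. The records and field names (`model`, `tags`) are hypothetical stand-ins I made up for illustration; the actual dataset's schema may differ.

```python
from collections import Counter

# Hypothetical sample records: each generated clip carries a list of genre tags.
# Field names and values are illustrative assumptions, not the real schema.
clips = [
    {"model": "Suno v3.5", "tags": ["electronic", "pop"]},
    {"model": "Udio", "tags": ["pop", "rock"]},
    {"model": "MusicGen Large", "tags": ["electronic", "soundtrack"]},
    {"model": "Riffusion", "tags": ["electronic"]},
]


def top_tags(records, n=10):
    """Return the n most frequent tags as (tag, count) pairs."""
    counts = Counter(tag for record in records for tag in record["tags"])
    return counts.most_common(n)


for tag, count in top_tags(clips):
    print(f"{tag}: {count}")
```

With real data, the only change would be loading the records from the dataset instead of the hard-coded list.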

Model Preference Distribution

The next analysis uses the accompanying dataset behind the previous plot. This dataset consists of 15,600 pairwise audio comparisons in which human raters indicated which clip they preferred.

As the chart above shows, we can easily identify the best-performing models. One interesting takeaway is that the top 2 spots are held by the same model, albeit in different versions. This seems to suggest that the Suno AI model is the best overall model by far and poses the biggest threat to disrupt the music industry. Additionally, the MTG-Jamendo dataset performed really well, taking the 4th spot (technically 3rd, if the two Suno versions are counted as one model), which should offer some peace of mind to those particularly fearful of AI-generated music overtaking human-made music in the near term. One thing is clear, however: the gap is certainly narrowing.
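As a rough illustration of how pairwise comparisons can be turned into a model ranking, the sketch below computes a simple win rate per model (wins divided by appearances). The sample rows are invented, and win rate is just one reasonable way to normalize the results; it is not necessarily the exact metric behind the chart.

```python
from collections import Counter

# Hypothetical pairwise comparisons: (model_a, model_b, preferred_model).
# These rows are invented for illustration only.
comparisons = [
    ("Suno v3.5", "Riffusion", "Suno v3.5"),
    ("Suno v3", "MusicGen Large", "Suno v3"),
    ("Suno v3.5", "MTG-Jamendo", "MTG-Jamendo"),
    ("Udio", "Riffusion", "Udio"),
    ("Suno v3.5", "Udio", "Suno v3.5"),
]


def win_rates(pairs):
    """Return {model: wins / appearances} across all pairwise comparisons."""
    appearances = Counter()
    wins = Counter()
    for model_a, model_b, winner in pairs:
        appearances[model_a] += 1
        appearances[model_b] += 1
        wins[winner] += 1
    return {model: wins[model] / appearances[model] for model in appearances}


rates = win_rates(comparisons)
# Print models from highest to lowest win rate.
for model, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{model}: {rate:.0%}")
```

Dividing by appearances matters because a model that shows up in more comparisons would otherwise look better simply by being sampled more often.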

Tag Frequencies by Preferred Model

The last analysis looks at the top tag frequencies by preferred model, to see whether any model excels at one genre over another. The heatmap below shows the following:

The Suno models outperform the others overall, regardless of the genre of music they produce.

I found it interesting that jazz and folk pieces don’t have a clear winner. Perhaps this is because they are less mainstream genres.
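The data behind a heatmap like this is essentially a cross-tabulation of preferred model against tag. Below is a minimal sketch of that step using made-up rows; the row structure is an assumption for illustration and not the dataset's real layout.

```python
from collections import Counter, defaultdict

# Hypothetical rows: (preferred model, tags attached to the preferred clip).
preferred = [
    ("Suno v3.5", ["electronic", "pop"]),
    ("Suno v3", ["pop"]),
    ("Udio", ["jazz"]),
    ("Suno v3.5", ["electronic"]),
    ("MTG-Jamendo", ["folk", "jazz"]),
]


def tag_counts_by_model(rows):
    """Build a {model: Counter(tag -> count)} cross-tabulation."""
    table = defaultdict(Counter)
    for model, tags in rows:
        table[model].update(tags)
    return table


table = tag_counts_by_model(preferred)

# Print a plain-text grid: one row per model, one column per tag.
all_tags = sorted({tag for counts in table.values() for tag in counts})
print("model".ljust(14) + "".join(tag.ljust(12) for tag in all_tags))
for model, counts in table.items():
    print(model.ljust(14) + "".join(str(counts[tag]).ljust(12) for tag in all_tags))
```

Each cell of the grid corresponds to one cell of the heatmap; a plotting library would only add the color scale.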

What Does This Mean for the Future?

The key takeaway from these analyses is that AI is more than just an emerging tool in the music industry. As the data shows, models such as Suno are quickly becoming powerful tools for creative exploration by non-artists (and perhaps existing artists). As AI technology continues to improve, we can expect an increasing reliance on these tools, especially in areas like EDM production, soundtracks, and ambient music.

However, the rise of AI-generated music presents a significant copyright challenge. AI models are trained on vast datasets, often without explicit consent from the original creators. This creates a legal gray area where both musicians and developers of AI tools face uncertainty. With the lack of comprehensive regulation, there is a pressing need for the music industry to establish clear frameworks for copyright, attribution, licensing, and revenue distribution in the age of AI.

Conclusion

The integration of AI into the music industry, and the disruption that comes with it, is inevitable. Generative models and tools such as Suno, MusicGen, and AudioLDM can empower non-musicians to explore their creativity and give existing professionals innovative production tools, with the potential to disrupt a multi-billion dollar industry. In short, AI has opened the doors to a new era of music creation that fits the cyclical pattern of formats the music industry has experienced over the last 50 years.

However, as the data seems to suggest, the disruption won’t affect the industry equally. Genres like electronic and soundtrack music may experience the most immediate changes. At the same time, the growing preference for certain AI models points to some potential new giants in the music industry.

Nonetheless, these opportunities come hand-in-hand with challenges. The current lack of regulation around how AI models are trained and used raises significant questions about copyright, attribution, and fair compensation to artists. As AI narrows the gap between human and machine-generated music, these unresolved issues could create a “copyright nightmare” that threatens the very ecosystem it aims to enhance.

The path forward lies in thoughtful collaboration. Musicians, record labels, policymakers, and even consumers must work together to navigate these challenges and shape a future where AI’s primary purpose is to preserve and enhance artistic integrity. By addressing these concerns from the get-go, we can ensure that the disruption of music remains as inspiring as the art of making music itself.

What are your thoughts on AI’s role in shaping the music industry? Have you used any of the models covered in this analysis? Share your insights in the comments below, and let’s start a conversation about the future of music creation. Also please consider subscribing to continue exploring more data-driven stories together!

Thanks for reading! ✌️

If you’re curious to see the stories data can tell—whether it’s about sports, music, videogames, movies, etc.—hit subscribe! 📩 Join the visualytics community to get fresh insights delivered straight to your inbox. Your support helps me bring more visual stories to life! 🚀

I appreciate the extensive research and data trawling that went into this. It does seem inevitable that tools like Suno will have a dramatic effect on the future of the music industry. Unfortunately, I am not hopeful that it will be about "thoughtful collaboration" as much as it will be about dehumanizing and devaluing human-made music until music is no longer a source of motivation or inspiration and has had the soul sucked right out of it. I just published something about this, triggered by some comments made by the Suno CEO: https://deeplywrong.substack.com/p/music-is-too-difficult-for-techbros

Great stuff, I definitely learned a few things!

When you say AI is likely to disrupt traditional workflows in music creation, is that a bad thing? Music creation has already become a highly technical and collaborative process, a far cry from the traditional singer-with-a-guitar. The way I see it, AI is just the next machine in the room, offering new possibilities and tools for artists to explore.