RAG Relegated: Just-in-Time Context Scarcity Verified

From RAG to JIT: how agents slash token waste with active, on-demand context.


This article draws on recent blog reading and my own experience with Agent products to discuss a key paradigm shift in context engineering: from LLMs passively receiving context to Agents actively acquiring it (Just-in-Time Context).

The content mainly includes:

Recall: Revisiting and expanding on the What & Why of Context Engineering

JIT Context: What is Just-in-Time Context (which I think could also be called Agentic Context), and why do we need it?

Effective JIT Context Engineering: The cost of JIT, and how to achieve efficient context management through Compress / Write / Isolate

Recall & Introduction

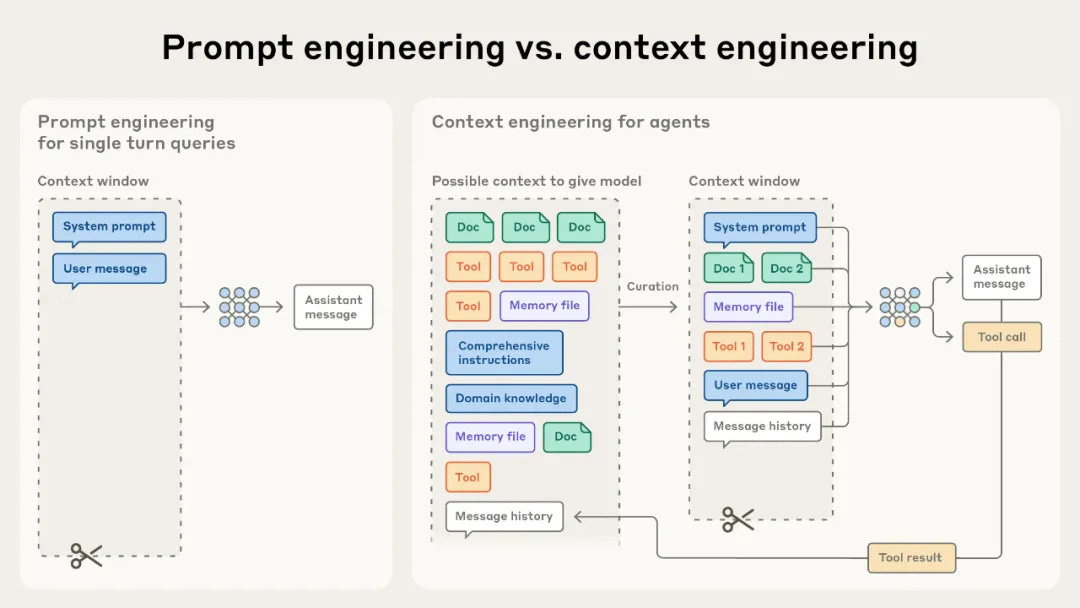

Shortly after I finished writing this blog post, a diagram from Anthropic helped everyone understand the distinctions more accurately (a picture is worth a thousand words):

To briefly review Anthropic’s definitions: prompt engineering is the practice of writing and organizing LLM instructions to get optimal results, while context engineering is the practice of dynamically curating and maintaining the optimal set of tokens during LLM inference (that set includes any information that might enter the context).
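To make “maintaining the optimal set of tokens” concrete, here is a minimal sketch that treats context selection as a budgeted choice: each candidate item has a token cost and a relevance score, and items are picked greedily by relevance per token until the budget runs out. The `ContextItem` type, the `select_context` function, the scores, and the token counts are all hypothetical; real systems estimate relevance in many different ways, and greedy selection is a heuristic rather than an optimal solution.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    """One candidate piece of context (hypothetical structure for illustration)."""
    name: str
    tokens: int       # assumed token count of this item
    relevance: float  # assumed relevance score for the current step


def select_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily pick items with the best relevance per token until the budget is spent.

    Knapsack-style heuristic: items that do not fit in the remaining budget are skipped.
    """
    ranked = sorted(items, key=lambda it: it.relevance / it.tokens, reverse=True)
    chosen: list[ContextItem] = []
    used = 0
    for item in ranked:
        if used + item.tokens <= budget:
            chosen.append(item)
            used += item.tokens
    return chosen


if __name__ == "__main__":
    candidates = [
        ContextItem("system_prompt", 300, 1.0),
        ContextItem("full_api_docs", 6000, 0.9),
        ContextItem("relevant_doc_section", 800, 0.8),
        ContextItem("old_chat_history", 2500, 0.2),
    ]
    picked = select_context(candidates, budget=2000)
    print([it.name for it in picked])
```

With a 2,000-token budget, the oversized `full_api_docs` item loses out to the smaller, targeted `relevant_doc_section`: spending scarce tokens where they carry the most useful information is the trade-off context engineering is about.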

Why does context engineering matter so much? My previous blog posts used two examples to demonstrate its importance; here I add a more research-based explanation. Research on context rot shows that as the number of tokens in the context window grows, the model’s ability to accurately retrieve information from that context declines (the “context degradation” section of the previous post implied the same conclusion). Some models degrade more gently than others, but the phenomenon appears across all models. We therefore need to treat context as a finite resource with diminishing marginal returns (the previous post made the same point by comparing the context window to memory in a computer system).

Anthropic’s blog puts it this way: just as human working memory is limited, LLMs have an “attention budget” that is consumed as they parse long contexts, and every new token draws down some of that budget. This underscores the importance of context engineering: dynamically curating and maintaining the optimal set of tokens without wasting the attention budget. The scarcity of attention stems from the Transformer architecture itself. Transformers let every token attend to every other token in the context, so N tokens produce N² pairwise interactions.
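The N² figure is easy to check with a little arithmetic. The sketch below counts the query-key scores in one full self-attention matrix, then uses a deliberately simplified toy model (an assumption for illustration, not a description of how trained models actually spread attention): one relevant token whose logit beats every other token by a fixed gap. Its softmax weight is e^gap / (e^gap + N - 1), which falls toward zero as N grows even though the gap never changes. The function names and the gap value of 5.0 are hypothetical.

```python
import math


def attention_pairs(n_tokens: int) -> int:
    """Query-key scores in one full (non-causal) self-attention matrix: N * N.

    A causal decoder masks the upper triangle, leaving N * (N + 1) / 2 scores,
    which is still quadratic in N.
    """
    return n_tokens * n_tokens


def weight_on_relevant_token(n_tokens: int, logit_gap: float) -> float:
    """Softmax weight on one token whose logit exceeds the other N - 1 logits by `logit_gap`.

    Toy model: all other tokens share the same logit (0). Real attention is far less uniform.
    """
    boosted = math.exp(logit_gap)
    return boosted / (boosted + (n_tokens - 1))


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(f"N={n:>7,}  pairs={attention_pairs(n):>15,}  "
              f"weight={weight_on_relevant_token(n, logit_gap=5.0):.4f}")
```

A tenfold longer context means a hundredfold more pairwise scores, and in this toy setting the relevant token’s share of attention drops by roughly an order of magnitude.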

As the context grows, this quadratic growth in interactions inevitably “dilutes” attention. Combined with the relative scarcity of long sequences in training data and the weaker sense of token position introduced by position-encoding interpolation, the result is that model performance degrades gradually on long contexts: it doesn’t collapse, but precision in long-range retrieval and reasoning is significantly reduced. Agent architectures inevitably produce long contexts. As Anthropic reports, “In our data, agents typically use about 4× more tokens than chat interactions, and multi-agent systems use about 15× more tokens than chats.” This is the fundamental purpose of context engineering: counteracting these architecturally rooted diminishing returns in order to build powerful Agentic systems.
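The two claims in this paragraph compound. Here is a minimal back-of-the-envelope sketch using Anthropic’s 4× and 15× multipliers and a hypothetical 2,000-token chat baseline: if all of an agent’s tokens end up in a single context window, pairwise attention scores grow with the square of the multiplier. That single-window assumption is an upper bound; multi-agent systems spread their tokens across several agents’ windows, so the 15× row overstates their per-window cost. The constant and function names are illustrative only.

```python
# Multipliers quoted from Anthropic in the text; the chat baseline is hypothetical.
CHAT_BASELINE_TOKENS = 2_000
MULTIPLIERS = {"chat": 1, "single agent": 4, "multi-agent": 15}


def token_usage(baseline: int, multiplier: int) -> int:
    """Estimated total tokens for a workload using `multiplier` times the chat baseline."""
    return baseline * multiplier


def attention_factor_if_one_window(multiplier: int) -> int:
    """Relative growth in pairwise attention scores, assuming all tokens share ONE window.

    Self-attention scales with N**2, so k times the tokens means k**2 times the pairs.
    Upper bound only: multi-agent systems split tokens across several windows.
    """
    return multiplier ** 2


if __name__ == "__main__":
    for name, k in MULTIPLIERS.items():
        print(f"{name:<13} tokens={token_usage(CHAT_BASELINE_TOKENS, k):>6,}  "
              f"pairs x{attention_factor_if_one_window(k)}")
```

For a single agent, 4× the tokens in one window already means about 16× the pairwise attention scores, which is one way to see why keeping each window lean matters.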