QwenLong-L1-32B: RL for Long-Context Reasoning

QwenLong-L1-32B: a 130K-token model for finance, law, and research. RL-trained long-context reasoning surpasses OpenAI-o3-mini and Qwen3-235B-A22B and matches Claude-3.7-Sonnet-Thinking.

The model's context length reaches 130,000 tokens, making it suitable for comprehensive analysis of multi-segment documents and for complex tasks in finance, law, scientific research, and similar domains.

Recent Large Reasoning Models (LRMs) have demonstrated powerful reasoning capabilities through Reinforcement Learning (RL), but these improvements show up primarily in short-context reasoning tasks. How to extend LRMs through RL so that they can effectively process and reason over long-context inputs remains an unresolved critical challenge.

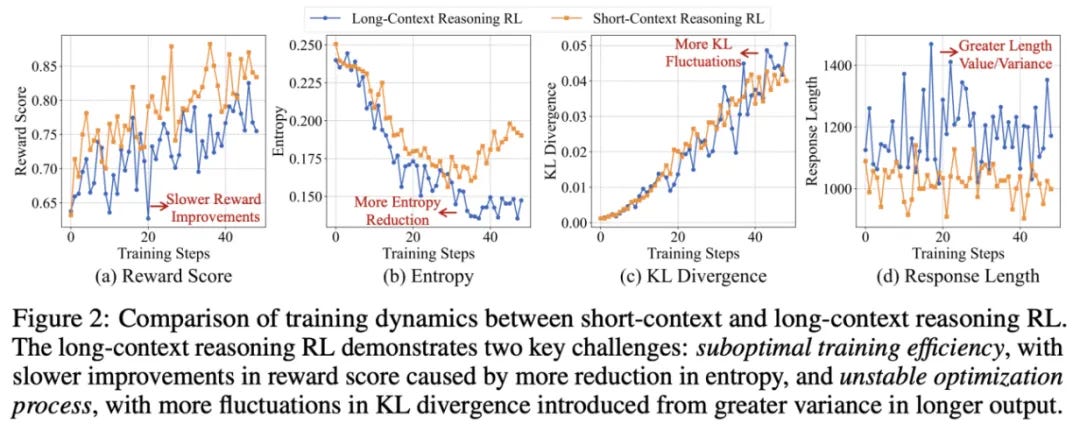

The team from Alibaba's Tongyi Laboratory first formally defines the long-context reasoning reinforcement learning paradigm and identifies two core challenges: suboptimal training efficiency and unstable optimization processes.

To address these issues, the team proposes QwenLong-L1, a reinforcement learning framework for long-context reasoning that gradually improves model performance through a progressive context extension strategy. On multiple long-document QA benchmarks, QwenLong-L1-32B surpasses flagship models such as OpenAI-o3-mini and Qwen3-235B-A22B and matches the performance of Claude-3.7-Sonnet-Thinking.
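
To make the idea of progressive context extension more concrete, here is a minimal sketch of a staged curriculum in which each training stage admits longer inputs than the one before. It is not the team's implementation: the `Example` class, the `build_stages` helper, and the stage limits of 20,000 and 60,000 tokens are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Example:
    prompt_tokens: int  # length of the long-context input, in tokens
    question: str


def build_stages(examples, stage_limits):
    """Return (limit, examples) pairs ordered from shortest to longest limit.

    Each stage contains every example whose input fits within that stage's
    limit, so later stages extend the context seen in earlier ones.
    This is an illustrative assumption, not the published training recipe.
    """
    stages = []
    for limit in sorted(stage_limits):
        batch = [ex for ex in examples if ex.prompt_tokens <= limit]
        stages.append((limit, batch))
    return stages


if __name__ == "__main__":
    # Hypothetical data: four documents of increasing length.
    data = [
        Example(5_000, "short report"),
        Example(18_000, "contract"),
        Example(45_000, "annual filing"),
        Example(90_000, "multi-document bundle"),
    ]
    for limit, batch in build_stages(data, [20_000, 60_000]):
        # A real pipeline would run RL training on `batch` here.
        print(f"stage limit {limit}: {len(batch)} examples")
```

In this sketch, the model would first be trained on the shorter inputs and only later exposed to the longer ones, which is one plausible way to avoid starting optimization directly on the hardest, longest contexts.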