Qwen3 Embedding Models: 3 Sizes, Outperform APIs, SOTA Achieved

Qwen3-Embedding: 3 SOTA text models (0.6B-8B) for NLP & RAG. Beats APIs, supports 119 languages.

From the "AI Disruption" publication.

Qwen3 Unveils New Embedding Series at Midnight!

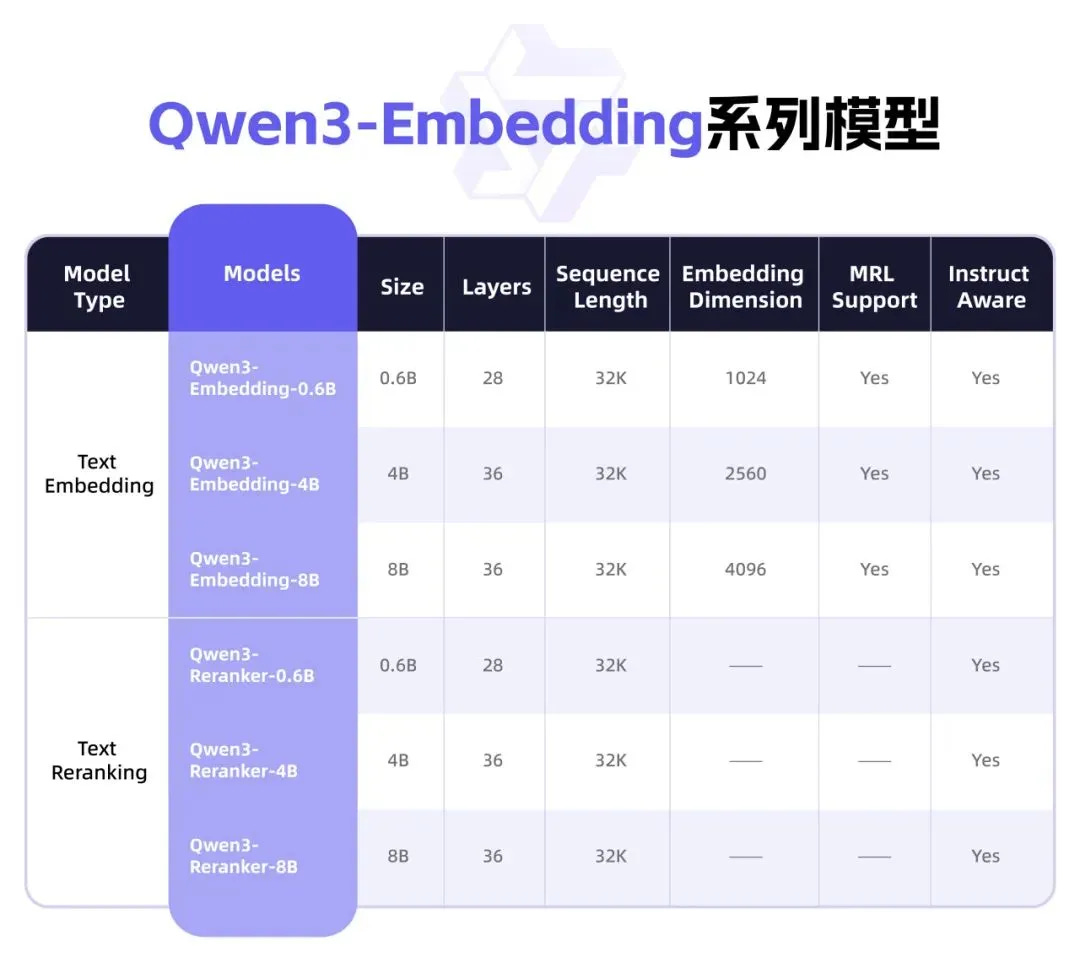

The Qwen3-Embedding series has officially launched, designed specifically for text representation, retrieval, and ranking tasks. It transforms text (such as sentences or paragraphs) into high-quality vector representations to enhance natural language processing in applications like semantic search, question-answering systems, and recommendation engines.
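To show what "vector representations for semantic search" means in practice, here is a minimal sketch that ranks documents by cosine similarity to a query vector. The 4-dimensional vectors are hypothetical placeholders for real model outputs, which have many more dimensions. The functions `cosine_similarity` and `rank_documents` are illustrative helpers, not part of any Qwen API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_documents(query_vec, doc_vecs):
    """Return (index, score) pairs sorted from most to least similar."""
    scores = [(i, cosine_similarity(query_vec, d)) for i, d in enumerate(doc_vecs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

# Hypothetical embeddings standing in for real Qwen3-Embedding outputs.
docs = {
    "How to reset a router": [0.9, 0.1, 0.0, 0.0],
    "Best pasta recipes": [0.0, 0.8, 0.5, 0.1],
    "Troubleshooting Wi-Fi drops": [0.7, 0.0, 0.1, 0.7],
}
# Hypothetical embedding of the query "my internet keeps disconnecting".
query = [0.6, 0.05, 0.05, 0.6]

titles = list(docs)
for idx, score in rank_documents(query, list(docs.values())):
    print(f"{score:.3f}  {titles[idx]}")
```

The document whose vector points in the most similar direction to the query ranks first, even though it shares no exact keywords with it. This is the property a good embedding model is trained to provide.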

It can be used for tasks such as document retrieval, RAG, classification, and sentiment analysis.
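For classification and sentiment analysis, one simple approach (assumed here, not one described by the Qwen team) is a nearest-centroid classifier. It averages the embeddings of labeled examples per class, then assigns new text to the class whose centroid is most similar. The 3-dimensional vectors below are hypothetical stand-ins for real embeddings.

```python
import math

def normalize(vec):
    """Scale vec to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def train_centroids(examples):
    """examples: list of (vector, label) pairs.
    Returns label -> unit-length centroid. Normalizing the summed unit
    vectors gives the same direction as normalizing their mean."""
    sums = {}
    for vec, label in examples:
        unit = normalize(vec)
        total = sums.setdefault(label, [0.0] * len(unit))
        sums[label] = [s + x for s, x in zip(total, unit)]
    return {label: normalize(total) for label, total in sums.items()}

def classify(vec, centroids):
    """Return the label whose centroid has the highest cosine similarity to vec."""
    unit = normalize(vec)
    return max(centroids, key=lambda label: sum(a * b for a, b in zip(unit, centroids[label])))

# Hypothetical embeddings of short labeled reviews.
training = [
    ([0.9, 0.1, 0.2], "positive"),  # e.g. "Loved it"
    ([0.8, 0.2, 0.1], "positive"),  # e.g. "Great value"
    ([0.1, 0.9, 0.3], "negative"),  # e.g. "Broke after a day"
    ([0.2, 0.8, 0.2], "negative"),  # e.g. "Waste of money"
]
centroids = train_centroids(training)
print(classify([0.7, 0.3, 0.2], centroids))
```

Because all vectors are unit-length, the dot product in `classify` equals cosine similarity. With better embeddings, even this simple classifier can separate classes well without training a separate model.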

Built on the Qwen3 foundation model, it inherits Qwen3's multilingual capabilities and supports 119 languages.

The series comes in three sizes: 0.6B, 4B, and 8B parameters. The 8B model ranks first on the MTEB Multilingual Leaderboard, outperforming numerous commercial API services.

Some observers have speculated that the 0.6B model is aimed at on-device (mobile) RAG applications, fueling anticipation ahead of Apple's WWDC.
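In a RAG pipeline, an embedding model's job is the retrieval step: embed the stored text chunks, find those closest to the user's question, and pass them to a language model as context. The sketch below illustrates that flow under loud assumptions. `ToyEmbedder` is a hypothetical bag-of-words stand-in so the example runs without downloading anything; in a real app its `embed` method would call an actual embedding model (such as a small variant like the 0.6B one). `retrieve` and `build_prompt` are likewise illustrative names.

```python
import math
import re

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

class ToyEmbedder:
    """Hypothetical stand-in for a real embedding model:
    unit-length bag-of-words counts over a vocabulary built from the corpus."""

    def __init__(self, corpus):
        vocab = sorted({tok for doc in corpus for tok in tokenize(doc)})
        self.index = {tok: i for i, tok in enumerate(vocab)}

    def embed(self, text):
        vec = [0.0] * len(self.index)
        for tok in tokenize(text):
            if tok in self.index:  # out-of-vocabulary words are ignored
                vec[self.index[tok]] += 1.0
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec] if norm else vec

def retrieve(query, chunks, embedder, k=2):
    """Return the k chunks whose unit-length embeddings best match the query."""
    q = embedder.embed(query)
    scored = [(sum(a * b for a, b in zip(q, embedder.embed(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]

def build_prompt(query, context_chunks):
    """Assemble retrieved chunks and the question into a prompt for an LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

notes = [
    "The meeting with the design team is on Friday at 3 pm.",
    "Buy milk, eggs, and coffee on the way home.",
    "The design review moved to the large conference room.",
]
embedder = ToyEmbedder(notes)
question = "When is the design meeting?"
top = retrieve(question, notes, embedder, k=2)
print(build_prompt(question, top))
```

On a phone, the appeal of a small embedding model is that this retrieval step can run locally over personal data; only the generation step needs a larger model.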

This year, Alibaba confirmed its collaboration with Apple to provide services for Apple Intelligence in China.