Qwen Next-Gen Upgrade: 10x Faster & Cheaper on AIME Math

Qwen3-Next AI models: 32B size, 235B performance. 10x faster inference, open-sourced on Hugging Face.

"AI Disruption" publication: 7,600 subscriptions, 20% discount offer link.

32B Size Matches 235B Performance - Two New Models Now Open-Sourced

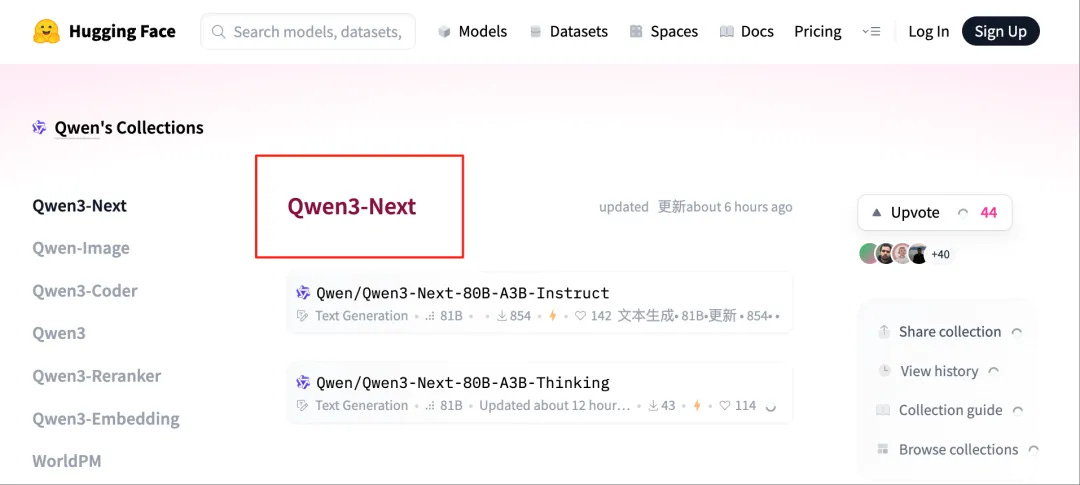

Today, Alibaba's Tongyi Lab officially released Qwen3-Next, its next-generation foundation model architecture, and trained the Qwen3-Next-80B-A3B-Base model on it. The model has 80 billion total parameters, of which only 3 billion are activated per token.
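As a rough illustration of what "80B total, 3B active" means, the sketch below computes the fraction of weights touched per token. It also applies the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token. That approximation ignores attention and routing overhead and is an assumption here, not a figure from Alibaba; the constant and function names are hypothetical.

```python
# Parameter counts as stated in the article.
TOTAL_PARAMS = 80e9   # total parameters
ACTIVE_PARAMS = 3e9   # parameters activated per token


def activation_ratio(active: float, total: float) -> float:
    """Fraction of the model's parameters used for a single token."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


def approx_forward_flops_per_token(active: float) -> float:
    """Rule-of-thumb estimate: about 2 FLOPs (one multiply, one add) per active parameter.

    Ignores attention and expert-routing costs (assumption, not an official figure).
    """
    return 2 * active


ratio = activation_ratio(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"Active fraction per token: {ratio:.2%}")  # 3.75%
print(f"Approx. forward FLOPs per token: {approx_forward_flops_per_token(ACTIVE_PARAMS):.1e}")
```

Under the same back-of-the-envelope rule, a dense 32B model would need about 6.4e10 FLOPs per token, roughly 10.7 times more than the 6e9 estimated here.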

The Base model was trained on a 15T-token subset of the Qwen3 pretraining corpus. Its training used only 9.3% of the GPU compute required by Qwen3-32B, and for contexts longer than 32K tokens, its inference throughput is more than 10 times that of Qwen3-32B.
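The 9.3% figure translates directly into a cost multiplier. The sketch below inverts it and scales a GPU-hour budget; the 100,000 GPU-hour baseline is a made-up number for illustration only, not a reported figure, and the function names are hypothetical.

```python
# Fraction of Qwen3-32B's GPU compute that Qwen3-Next-80B-A3B-Base needed (from the article).
COMPUTE_FRACTION = 0.093


def savings_factor(fraction: float) -> float:
    """How many times less compute the new model needed relative to the baseline."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return 1 / fraction


def scaled_budget(baseline_gpu_hours: float, fraction: float) -> float:
    """GPU-hours the new model would need if the baseline had used `baseline_gpu_hours`."""
    return baseline_gpu_hours * fraction


HYPOTHETICAL_BASELINE_GPU_HOURS = 100_000  # invented for illustration only

print(f"~{savings_factor(COMPUTE_FRACTION):.2f}x less compute")  # ~10.75x less compute
print(f"{scaled_budget(HYPOTHETICAL_BASELINE_GPU_HOURS, COMPUTE_FRACTION):,.0f} GPU-hours")  # 9,300 GPU-hours
```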

Alongside the Base model, Alibaba has open-sourced two models built on it: Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking. Both support a native context length of 262,144 tokens, extensible to 1,010,000 tokens.
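Extending from 262,144 to 1,010,000 tokens needs a RoPE scaling factor of just under 4. The sketch below computes that factor and builds a YaRN-style `rope_scaling` dictionary of the kind Qwen model cards describe for long-context use. The exact keys and the choice to round the factor up to a whole number are assumptions, so check the official Hugging Face model card before relying on them; the function names are hypothetical.

```python
import math

NATIVE_CONTEXT = 262_144      # native context length (from the article)
EXTENDED_CONTEXT = 1_010_000  # extended context length (from the article)


def min_scaling_factor(target: int, native: int) -> float:
    """Smallest factor by which the native context must be stretched to reach `target`."""
    return target / native


def yarn_rope_scaling(target: int, native: int) -> dict:
    """Build a YaRN-style rope_scaling entry (assumed key names).

    Rounds the factor up to the next whole number so native * factor >= target.
    """
    factor = float(math.ceil(min_scaling_factor(target, native)))
    return {
        "rope_type": "yarn",
        "factor": factor,
        "original_max_position_embeddings": native,
    }


print(round(min_scaling_factor(EXTENDED_CONTEXT, NATIVE_CONTEXT), 3))  # 3.853
cfg = yarn_rope_scaling(EXTENDED_CONTEXT, NATIVE_CONTEXT)
print(cfg)
```

With a factor of 4.0, the positional range covers 1,048,576 tokens, which comfortably includes the stated 1,010,000-token limit.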