OpenAI Shifts Next-Gen Model Strategy: Has the Scaling Law Hit a Wall?

Is AI scaling hitting limits? OpenAI shifts focus to data efficiency and inference innovation, exploring new ways to push LLMs amid data and compute challenges.

Is the Scaling Law for Large Models Reaching Its Limits? OpenAI Shifts Strategy.

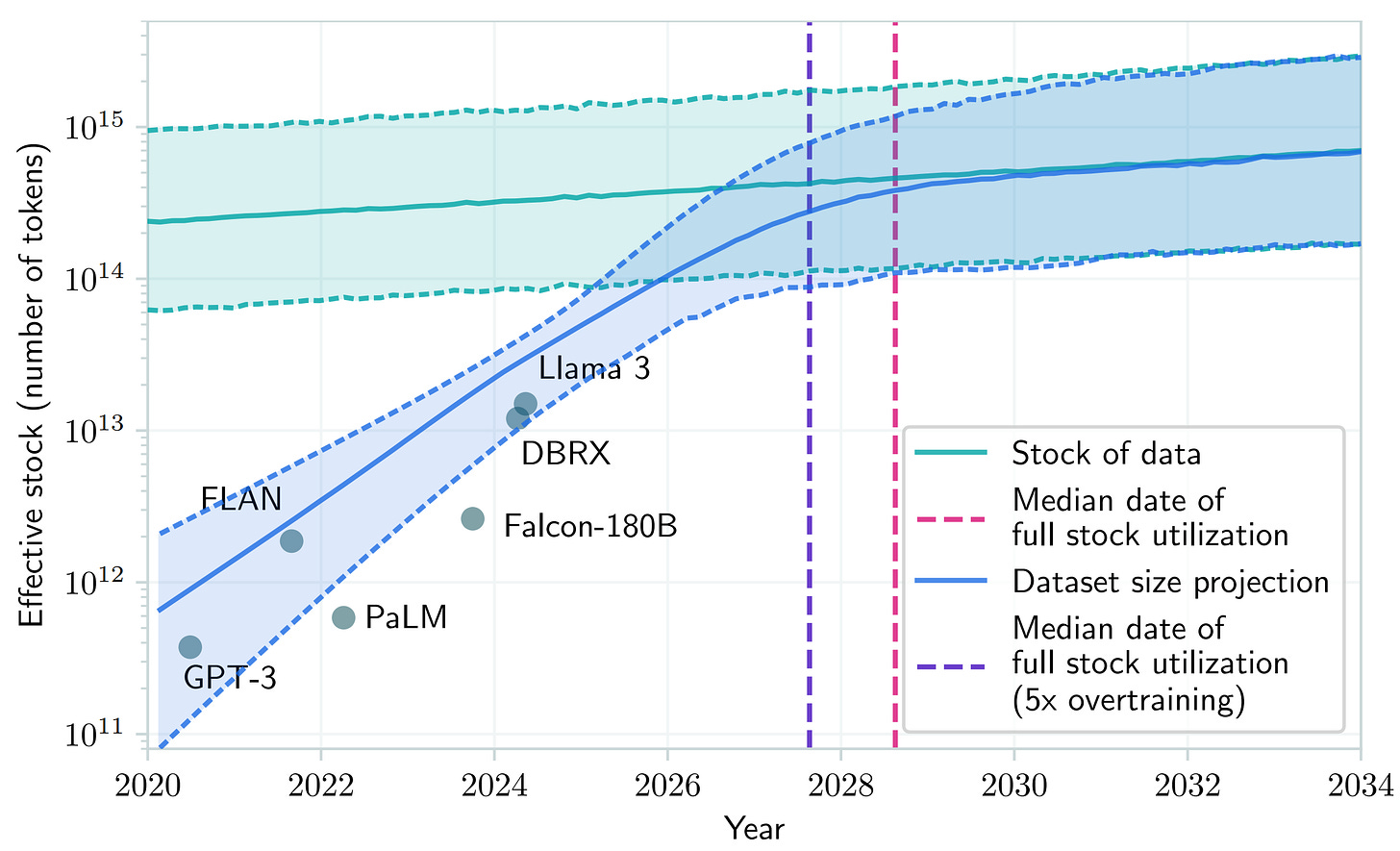

Research predicts that if LLM development continues on its current trajectory, the available stock of training data could be exhausted by around 2028. At that point, progress in large models that depends on ever more data might slow down or even come to a halt.

However, the slowdown may arrive before 2028. Here are some points of contention:

The quality improvement in OpenAI’s next-generation flagship model is reportedly smaller than the improvement between its previous two flagship models.

The AI industry is now focusing on improving models after their initial training.

OpenAI has formed a dedicated team to address the issue of training data scarcity.

OpenAI’s renowned research scientist Noam Brown has openly rejected the view that progress is stalling, stating that AI progress will not slow down in the near term. He also recently tweeted his support for OpenAI CEO Sam Altman’s comments on AGI, noting that its development will be faster than anticipated. Most researchers at OpenAI echo Brown’s view.