Muon Optimizer: 48% Less Compute Than AdamW, Compatible with DeepSeek

Discover how the improved Muon optimizer from Dark Side of the Moon (Moonshot AI) cuts compute requirements by 48% relative to AdamW, scales to larger models, and works with the DeepSeek architecture.


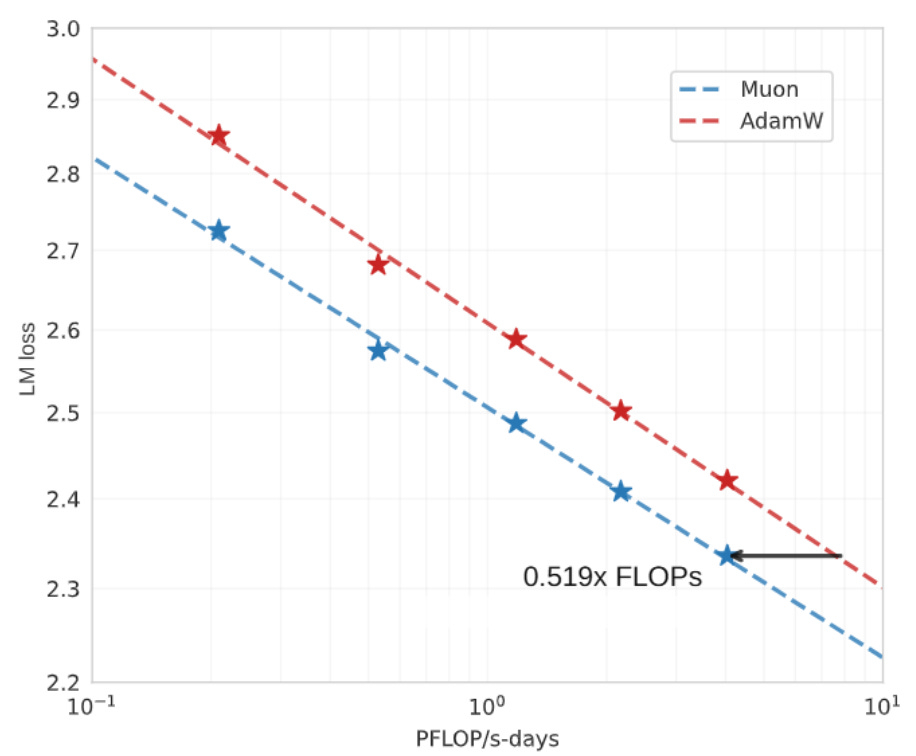

Compute requirements 48% lower than AdamW: Muon, the training optimizer proposed by OpenAI engineers, has been further improved by the Dark Side of the Moon (Moonshot AI) team.
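Muon ("MomentUm Orthogonalized by Newton-Schulz") updates each 2-D weight matrix by accumulating momentum and then replacing that momentum matrix with an approximately orthogonal one (the U V^T factor of its SVD), computed with a Newton-Schulz iteration instead of an explicit SVD. The sketch below is a simplified, pure-Python illustration, not the reference implementation: it uses the classic cubic iteration X <- 1.5 X - 0.5 X X^T X for clarity (the public Muon code uses a tuned quintic polynomial with only five steps), plain momentum instead of a Nesterov variant, and illustrative defaults `lr=0.02`, `beta=0.95`. The function names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def newton_schulz_orthogonalize(g, steps=30, eps=1e-7):
    """Approximate the orthogonal factor U V^T of g = U S V^T using the
    cubic Newton-Schulz iteration X <- 1.5 X - 0.5 X X^T X."""
    norm = math.sqrt(sum(v * v for row in g for v in row)) + eps
    x = [[v / norm for v in row] for row in g]  # all singular values now below 1
    tall = len(x) > len(x[0])
    if tall:
        x = transpose(x)  # iterate on the wide orientation so X X^T is the smaller Gram matrix
    for _ in range(steps):
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * xv - 0.5 * yv for xv, yv in zip(xr, yr)] for xr, yr in zip(x, xxtx)]
    return transpose(x) if tall else x

def muon_step(w, grad, momentum, lr=0.02, beta=0.95):
    """One simplified Muon step for a 2-D weight matrix (hypothetical helper).

    Returns (new_weights, new_momentum); plain momentum is used for brevity.
    """
    momentum = [[beta * m + gv for m, gv in zip(mr, gr)] for mr, gr in zip(momentum, grad)]
    o = newton_schulz_orthogonalize(momentum)
    new_w = [[wv - lr * ov for wv, ov in zip(wr, orow)] for wr, orow in zip(w, o)]
    return new_w, momentum

g = [[3.0, 1.0], [1.0, 2.0], [0.5, -1.0]]
q = newton_schulz_orthogonalize(g)
print(matmul(transpose(q), q))  # close to the 2x2 identity
```

Because every singular value of the update is pushed toward 1, no single direction in the weight matrix dominates the step, which is the intuition behind Muon's efficiency gains.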

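The team ran scaling-law experiments on Muon, identified the changes needed to make it work at scale (adding weight decay and carefully adjusting the per-parameter update scale), and showed that the improved Muon also works for larger models.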
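According to the team's paper, the two key changes are adding weight decay and rescaling each matrix's orthogonalized update so that its root-mean-square (RMS) size matches what AdamW typically produces. An orthogonalized A x B update has RMS of about 1/sqrt(max(A, B)), so multiplying it by 0.2 * sqrt(max(A, B)) gives every matrix an update RMS of roughly 0.2 regardless of its shape. The sketch below assumes that reading of the paper's update rule, W <- W - lr * (0.2 * sqrt(max(A, B)) * O + weight_decay * W); the function names and the `rms_target` parameter are hypothetical, and `o` is assumed to be the already-orthogonalized momentum.

```python
import math

def rms(m):
    """Root-mean-square of all entries of a matrix stored as a list of rows."""
    n = sum(len(row) for row in m)
    return math.sqrt(sum(x * x for row in m for x in row) / n)

def adjusted_muon_update(w, o, lr, weight_decay, rms_target=0.2):
    """Apply W <- W - lr * (rms_target * sqrt(max(A, B)) * O + weight_decay * W).

    `o` is assumed to be an (approximately) orthogonalized A x B update, whose RMS
    is about 1 / sqrt(max(A, B)); the scale factor lifts it to about rms_target.
    """
    a, b = len(w), len(w[0])
    scale = rms_target * math.sqrt(max(a, b))
    return [[w[i][j] - lr * (scale * o[i][j] + weight_decay * w[i][j]) for j in range(b)]
            for i in range(a)]

# A 3x2 matrix with orthonormal columns stands in for an orthogonalized update.
o = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
w = [[0.0, 0.0] for _ in range(3)]
print(rms(adjusted_muon_update(w, o, lr=1.0, weight_decay=0.0)))  # about 0.2
```

Matching AdamW's update size is what lets the learning rate and weight decay settings tuned for AdamW carry over, which is the motivation the paper gives for this rescaling.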

Across Llama-architecture models of various sizes up to 1.5B parameters, the improved Muon needs only about 52% of AdamW's training compute to match AdamW's performance.
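The "52% of the compute" figure and the "48% less compute" headline are the same result expressed two ways; equivalently, it is roughly a 1.9x gain in compute efficiency:

$$
\text{savings} = 1 - 0.52 = 0.48, \qquad \text{efficiency gain} = \frac{1}{0.52} \approx 1.92\times
$$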

The team also trained a 16B-parameter mixture-of-experts (MoE) model based on the DeepSeek architecture and has open-sourced it together with the improved optimizer.