MiniMax M2.1 Tops AI Coding

MiniMax M2.1 crushes SWE-bench, delivers Rust/Go/React code that runs—ready for Cursor & Claude Code.


Over the past two days, MiniMax has undoubtedly been the center of attention in China’s AI industry.

On December 21st, MiniMax (Xiyu Technology) officially submitted its prospectus to the Hong Kong Stock Exchange, revealing a series of numbers that instantly ignited public discussion: over $1 billion in cash reserves, a 174.7% year-over-year revenue surge in the first nine months of 2025, and an adjusted net loss held to $186 million despite sustained, high-intensity R&D spending.

Before the capital-market buzz had even subsided, MiniMax played another trump card on December 23rd: the official launch of the MiniMax M2.1 model.

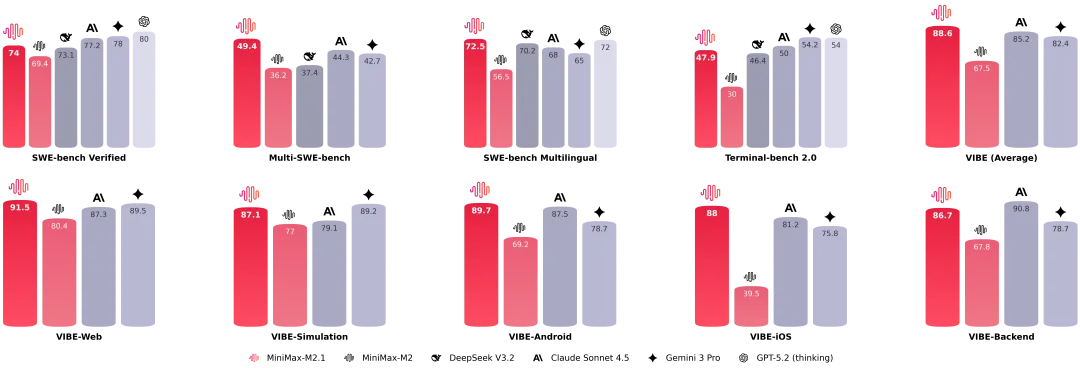

This was not a routine version iteration. According to official disclosures, M2.1 scored 72.5% on the SWE-bench Multilingual evaluation, a state-of-the-art (SOTA) result that surpasses both Gemini 3 Pro and Claude Sonnet 4.5.

More importantly, M2.1 is no longer limited to Python or frontend code generation. It pushes into a broader set of backend languages such as Rust, Java, and C++, aiming to fix two pain points of earlier models: code that “looks right but doesn’t run” and a lack of “engineering sense.”

At the same time, M2.1 has significantly strengthened its native Android and iOS development capabilities, promoting the slogan “Not only vibe WebDev, but also vibe AppDev.”

To back up this “zero-to-one” full-stack capability, MiniMax has also built and open-sourced a new benchmark called VIBE (Visual & Interactive Benchmark for Execution in Application Development). Unlike traditional benchmarks, VIBE covers five core subsets (Web, simulation, Android, iOS, and backend) and introduces an Agent-as-a-Verifier (AaaV) paradigm, which automatically evaluates the interactive logic and visual aesthetics of generated applications in real runtime environments. On this “full-stack construction” test, M2.1 posted an average score of 88.6, significantly outperforming Claude Sonnet 4.5 in almost all subsets and approaching the level of Claude Opus 4.5.
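
To make the Agent-as-a-Verifier idea more concrete, here is a minimal Python sketch of how a harness of this kind might be organized. It is not VIBE's actual code: the `Task` type, the `build_and_run` and `verifier` callables, the 0-100 scoring scale, and the unweighted averaging are all assumptions made for illustration. Only the five subset names come from the announcement.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, Iterable, List

# The five subsets named in the announcement.
SUBSETS = ("web", "simulation", "android", "ios", "backend")


@dataclass
class Task:
    subset: str  # one of SUBSETS
    prompt: str  # what the model is asked to build


# Hypothetical signatures (assumptions, not VIBE's API):
# - build_and_run: generates the app for a task and returns a handle to the running instance
# - verifier: an agent that exercises the running app and returns a score in [0, 100]
BuildAndRun = Callable[[Task], str]
Verifier = Callable[[Task, str], float]


def evaluate(tasks: Iterable[Task], build_and_run: BuildAndRun,
             verifier: Verifier) -> Dict[str, float]:
    """Return the mean verifier score for each subset that has at least one task."""
    per_subset: Dict[str, List[float]] = {name: [] for name in SUBSETS}
    for task in tasks:
        app_handle = build_and_run(task)  # the app is judged while actually running
        per_subset[task.subset].append(verifier(task, app_handle))
    return {name: mean(scores) for name, scores in per_subset.items() if scores}


def overall(subset_scores: Dict[str, float]) -> float:
    """Unweighted mean across subsets (an assumed aggregation rule)."""
    return mean(list(subset_scores.values()))
```

The point of the structure is that the verifier sees a running application rather than source text, which is what separates this style of evaluation from static test-case matching. How VIBE actually weights subsets or scores aesthetics is not described in the announcement.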