LightLab: Google Pushes Cinematic Lighting Control to the Limit with Diffusion Models

Google's LightLab uses diffusion models for cinematic lighting control in images. It can adjust the intensity and color of lights and add virtual light sources, all from a single photo.


Recently, Google introduced a project called LightLab, which enables precise control over light and shadow in images.

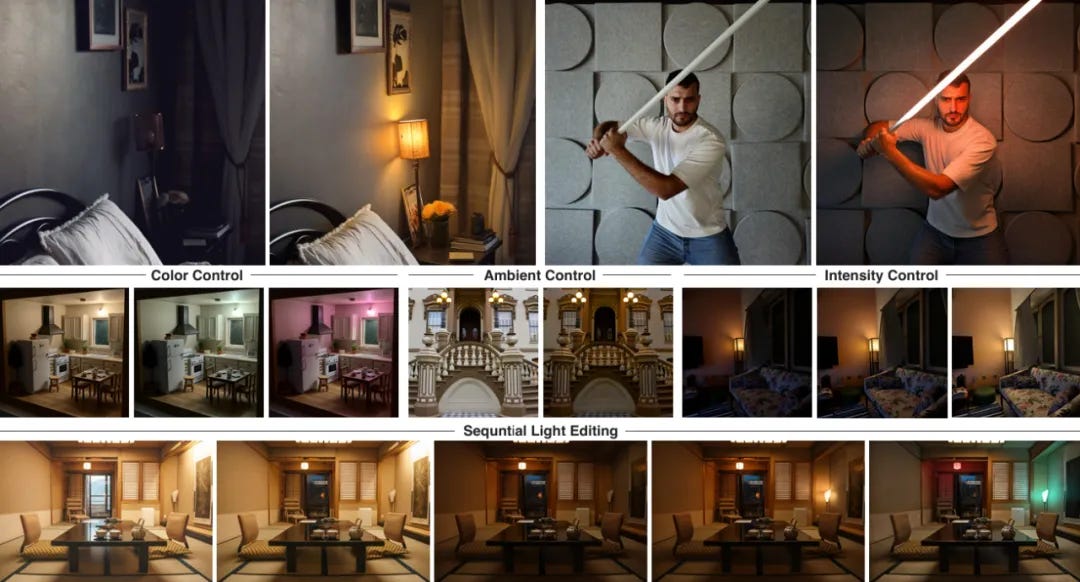

It gives users fine-grained, parametric control over light sources from a single image. Users can adjust the intensity and color of visible light sources, adjust the intensity of ambient light, and insert virtual light sources into the scene.
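To make "parametric control" more concrete, here is a minimal sketch of how such parameters can act on pixels. It assumes two images of the same scene, one with a target light switched off and one with it switched on, stored in linear RGB. Because light adds linearly in linear color space, the target light's own contribution is the difference between the two images, and that contribution can be rescaled and tinted independently of the ambient light. The function `relight` and its parameters (`ambient_scale`, `light_scale`, `light_color`) are hypothetical illustrations, not LightLab's API. LightLab performs its edits with a fine-tuned diffusion model, and this arithmetic does not cover inserting new virtual lights.

```python
from typing import List, Tuple

# Hypothetical pixel format: linear RGB, not gamma-encoded sRGB.
Pixel = Tuple[float, float, float]


def relight(
    img_off: List[Pixel],
    img_on: List[Pixel],
    ambient_scale: float,
    light_scale: float,
    light_color: Pixel,
) -> List[Pixel]:
    """Recombine ambient light and one light source's contribution.

    img_off: the scene with the target light switched off (ambient only).
    img_on:  the same scene with the target light switched on.
    """
    out: List[Pixel] = []
    for off, on in zip(img_off, img_on):
        pixel = []
        for ch in range(3):
            # Isolate the target light's contribution; clamp negative noise to zero.
            contribution = max(on[ch] - off[ch], 0.0)
            # Scale the ambient term and the tinted, scaled light term, then add them.
            value = ambient_scale * off[ch] + light_scale * light_color[ch] * contribution
            pixel.append(value)
        out.append((pixel[0], pixel[1], pixel[2]))
    return out
```

For example, `relight(off, on, 1.0, 0.5, (1.0, 0.6, 0.3))` keeps the ambient light unchanged and dims the target light to half strength with a warm tint. Working in linear RGB matters here: gamma-encoded sRGB values do not add linearly, so subtracting them would not cleanly isolate one light.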

In photography and filmmaking, light is the soul of an image: it determines the focus, depth, color, and even the mood of a scene.

In films, for instance, well-crafted lighting can subtly shape a character’s emotions, enhance the story’s atmosphere, guide the audience’s attention, and even reveal a character’s inner world.

However, whether in traditional photographic post-processing or in adjustments after digital rendering, precisely controlling the direction, color, and intensity of light and shadow has always been time-consuming, labor-intensive, and heavily dependent on experience.

Existing lighting-editing techniques fall short in one of two ways. Some require multiple photos of the same scene, so they cannot work from a single image. Others can edit a single image but lack precise control over the specific change, such as an exact brightness level or color.

Google’s research team fine-tuned a diffusion model on a specially constructed dataset, enabling it to learn how to precisely control lighting in images.

To build this training dataset, the team combined two sources: a small set of real-world photo pairs with controlled lighting variations and a large set of synthetically rendered images generated using a physically based renderer.
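The article does not say how the two sources are combined during training, so the following is only a hedged sketch under stated assumptions. It assumes each training pair, whether photographed or rendered, shows the same scene with a target light off and on, in linear RGB. It then illustrates two ideas: a mixing weight (the hypothetical `real_fraction`) so the small real set is not swamped by the much larger synthetic one, and randomly sampled lighting parameters that turn one pair into many training targets through linear recombination. The names `LightPair` and `sample_example` and all sampling ranges are illustrative assumptions, not details of Google's pipeline.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # linear RGB, not gamma-encoded sRGB


@dataclass
class LightPair:
    """One scene photographed or rendered with a target light off and on (hypothetical format)."""
    img_off: List[Pixel]
    img_on: List[Pixel]
    source: str  # "real" or "synthetic"


def relight(pair: LightPair, ambient_scale: float, light_scale: float,
            color: Pixel) -> List[Pixel]:
    """Linearly recombine the ambient light and the target light's contribution."""
    return [
        tuple(
            ambient_scale * off[c] + light_scale * color[c] * max(on[c] - off[c], 0.0)
            for c in range(3)
        )
        for off, on in zip(pair.img_off, pair.img_on)
    ]


def sample_example(real: List[LightPair], synthetic: List[LightPair],
                   real_fraction: float, rng: random.Random):
    """Draw one (source, input image, parameters, target image) training example."""
    # Pick the dataset first, so the real/synthetic mix does not depend on dataset sizes.
    pool = real if rng.random() < real_fraction else synthetic
    pair = rng.choice(pool)
    # Illustrative parameter ranges only; the ranges used in practice are unknown here.
    params = {
        "ambient_scale": rng.uniform(0.0, 1.0),
        "light_scale": rng.uniform(0.0, 2.0),
        "color": (rng.uniform(0.5, 1.0), rng.uniform(0.5, 1.0), rng.uniform(0.5, 1.0)),
    }
    target = relight(pair, params["ambient_scale"], params["light_scale"], params["color"])
    # A model would receive the lit image plus the parameters and learn to predict the target.
    return pair.source, pair.img_on, params, target


if __name__ == "__main__":
    rng = random.Random(0)
    real = [LightPair([(0.1, 0.1, 0.1)], [(0.6, 0.5, 0.4)], "real")]
    synthetic = [LightPair([(0.2, 0.2, 0.2)], [(0.9, 0.9, 0.9)], "synthetic")]
    source, image, params, target = sample_example(real, synthetic, 0.3, rng)
    print(source, params, target)
```

Setting `real_fraction` above the real set's share of the total data oversamples the real photos. The right value is an empirical choice, and nothing here reflects the setting Google actually used.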