Kimi's open-source audio model tops multiple benchmarks

Kimi-Audio: Open-source SOTA audio model, #1 in 10+ benchmarks. Supports ASR, dialogue & more. GitHub link included!


The "Hexagonal Warrior," an All-Rounder With No Weak Spots, Has Arrived.

Today, Kimi released a new open-source project: Kimi-Audio, a general-purpose audio foundation model that supports tasks such as speech recognition, audio understanding, audio-to-text transcription, and voice dialogue. It achieves state-of-the-art (SOTA) performance on more than ten audio benchmarks.

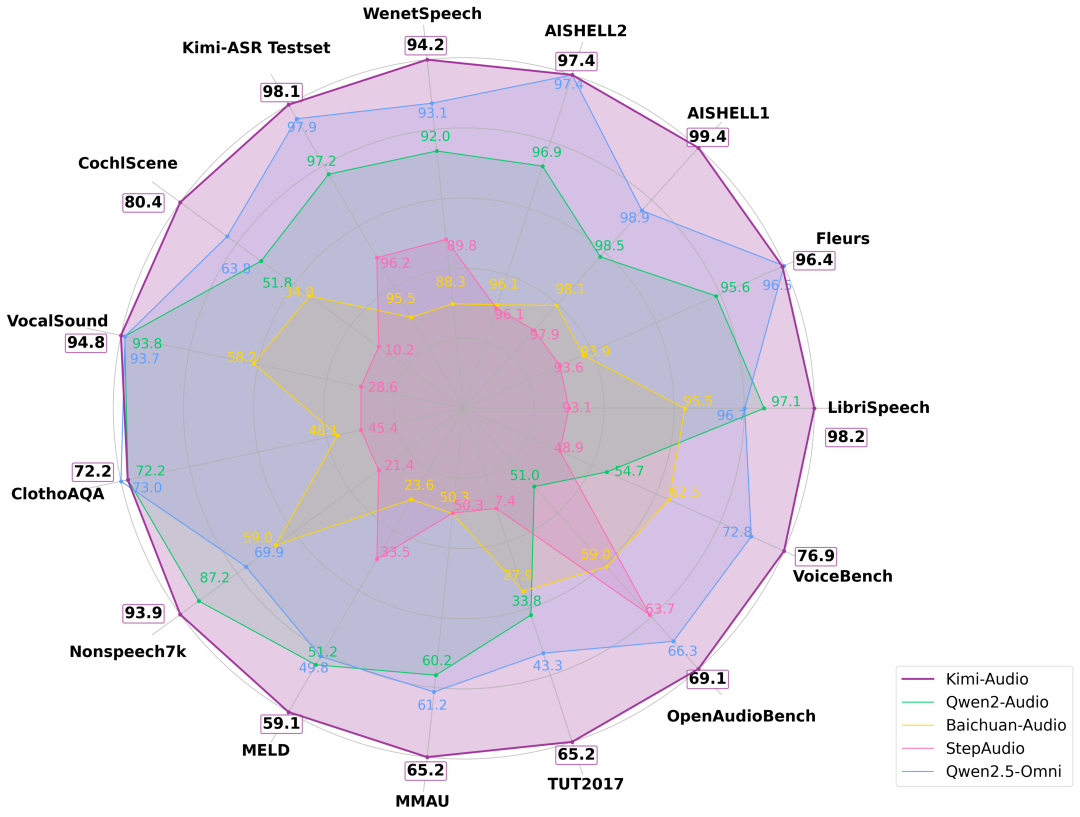

Results show that Kimi-Audio ranks first in overall performance, with almost no noticeable weaknesses.

For example, on the LibriSpeech ASR test, Kimi-Audio achieves a word error rate (WER) of only 1.28%, significantly better than the other models compared (lower WER is better). On the VocalSound test, Kimi-Audio scores 94.85%.
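For readers unfamiliar with the metric, WER is the number of word-level substitutions, deletions, and insertions needed to turn the model's transcript into the reference transcript, divided by the number of words in the reference. The sketch below is a minimal illustration of that standard definition; it is not taken from Kimi-Audio's evaluation toolkit, and the function name `word_error_rate` and the whitespace tokenization are assumptions made for the example (real evaluations usually also normalize case and punctuation).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative only: splits on whitespace and does no text normalization.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion (reference word missing)
                curr[j - 1] + 1,                  # insertion (extra hypothesis word)
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    ref = "the cat sat on the mat"
    hyp = "the cat sit on mat"  # one substitution, one deletion
    print(f"WER: {word_error_rate(ref, hyp):.2%}")
```

On this scale, a WER of 1.28% corresponds to roughly one word error per 78 reference words.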

In the MMAU task, Kimi-Audio secures the highest scores in two categories. In VoiceBench, which evaluates the speech understanding capabilities of dialogue assistants, Kimi-Audio achieves the highest scores across all subtasks, including one perfect score.

The development team built an evaluation toolkit to assess audio LLMs fairly and comprehensively across multiple benchmark tasks, and used it to compare five audio models (Kimi-Audio, Qwen2-Audio, Baichuan-Audio, StepAudio, and Qwen2.5-Omni) on various audio benchmarks. In the radar chart of the results, the purple line (Kimi-Audio) essentially forms the outermost ring, indicating the strongest overall performance.