Kimi Linear: 6.3× Faster 1M-Token Decoding, 75% Less KV Cache

Kimi Linear: 6× faster, 75% leaner attention that rewrites the limits of the Transformer.


The Era of Transformers is Being Rewritten

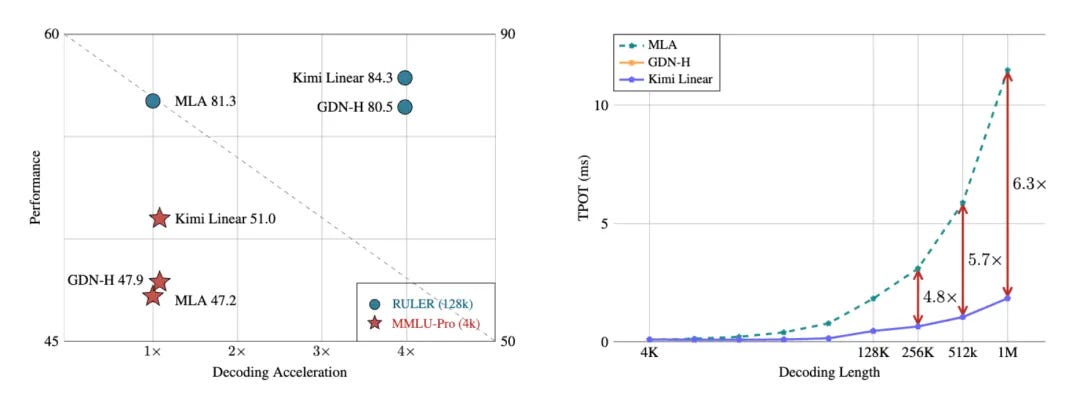

Moonshot AI’s newly open-sourced Kimi Linear architecture uses a new linear attention mechanism that, for the first time under identical training conditions, outperforms full-attention models.

In long-context tasks, it not only cuts KV cache requirements by 75% but also delivers up to 6× faster decoding.
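The 75% figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes a hybrid stack in which every three linear-attention layers (which keep a fixed-size state instead of a growing cache) are followed by one full-attention layer, the 3:1 layout described in Moonshot AI's report and treated here as an assumption. The model dimensions (`NUM_LAYERS`, `NUM_KV_HEADS`, `HEAD_DIM`) are hypothetical and are not Kimi Linear's actual configuration.

```python
# Back-of-the-envelope KV cache estimate for a 1M-token context.
# Assumption: only full-attention layers store per-token K/V; linear-attention
# layers keep a fixed-size state, which is ignored here.

SEQ_LEN = 1_048_576   # 1M-token context (2**20 tokens)
NUM_LAYERS = 24       # hypothetical model depth
NUM_KV_HEADS = 8      # hypothetical number of KV heads
HEAD_DIM = 128        # hypothetical head dimension
BYTES_PER_ELEM = 2    # fp16/bf16


def kv_cache_bytes(full_attn_layers: int) -> int:
    # Factor 2 = one K and one V vector per token, per head, per layer.
    per_layer = 2 * SEQ_LEN * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_ELEM
    return full_attn_layers * per_layer


def full_attention_layers(num_layers: int, linear_per_full: int) -> int:
    # In each repeating block of `linear_per_full` linear layers plus one
    # full-attention layer, only the full-attention layer keeps a KV cache.
    return num_layers // (linear_per_full + 1)


baseline = kv_cache_bytes(NUM_LAYERS)
hybrid = kv_cache_bytes(full_attention_layers(NUM_LAYERS, linear_per_full=3))
reduction = 1 - hybrid / baseline

print(f"full attention: {baseline / 2**30:.1f} GiB")  # 96.0 GiB
print(f"3:1 hybrid:     {hybrid / 2**30:.1f} GiB")    # 24.0 GiB
print(f"reduction:      {reduction:.0%}")             # 75%
```

Because the linear layers' fixed-size state is ignored, the real saving is slightly below this idealized number. The decoding speedup cannot be derived this way, since it also depends on kernels and memory bandwidth.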

When will a Kimi K2.5 built on this architecture arrive?

But first, let’s look at how Kimi Linear challenges traditional Transformers.