Karpathy’s 2025 Viral Wrap: AI’s 6 Make-or-Break Moments

Karpathy's 2025 AI recap: six game-changers, from RLVR to vibe coding, and why models got spiky and coding went free.


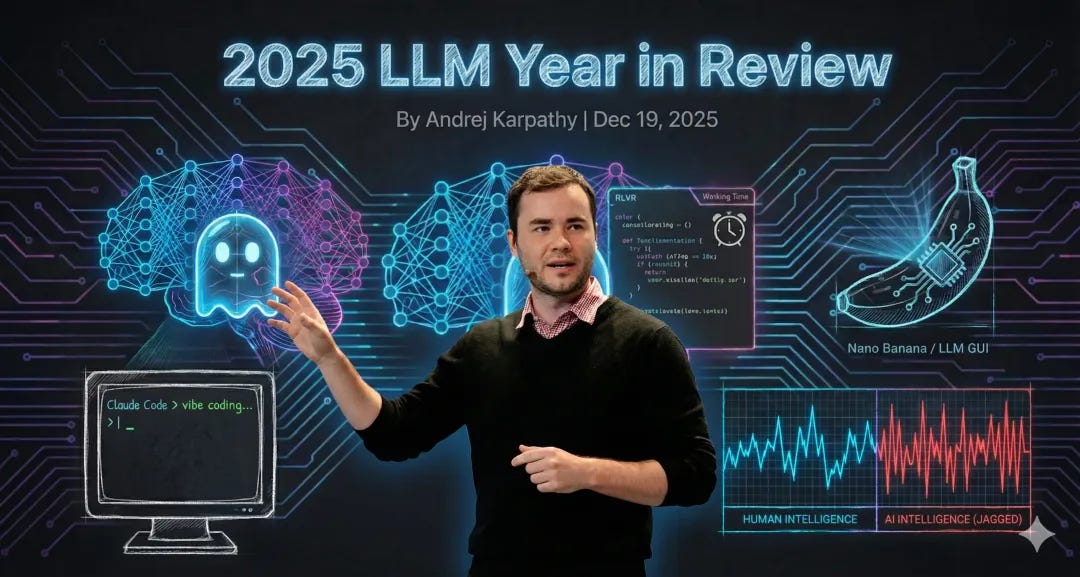

Year-end reviews have been rolling out over the past few weeks, and Andrej Karpathy, a co-founder of OpenAI, has delivered his annual summary of large language models.

Earlier this year, his talk at YC went viral, putting forward several novel ideas:

Software 3.0 has arrived: We went from writing code by hand (1.0) to training models on data (2.0), and we have now entered the 3.0 era, where we program models directly through prompts, almost like "casting spells."

LLMs are the new operating system: They act like a complex OS that manages memory (the context window) and CPU (inference compute).

The decade of agents: Don't expect AI agents to mature within a year. Getting from 99% to 99.999% reliability will take a decade.

2025 has been both exciting and somewhat overwhelming.

LLMs are emerging as a new kind of intelligence, one that is simultaneously far smarter and far dumber than I expected.

Either way, they are extremely useful. I believe that even at their current capability level, the industry has not tapped even 10% of their potential. At the same time, there are still so many ideas left to try, and conceptually the field still feels wide open.

As I said earlier this year, I hold two beliefs at once (somewhat paradoxically): we will see continued rapid progress, and there is still a massive amount of hard work ahead.

Buckle up, we’re about to take off.