Google's 0.3B Model: Offline Phone Use, Only 0.2GB Memory

Google's EmbeddingGemma: 0.3B open model for offline AI. Runs on <200MB RAM, tops MTEB benchmarks for RAG & semantic search on devices.


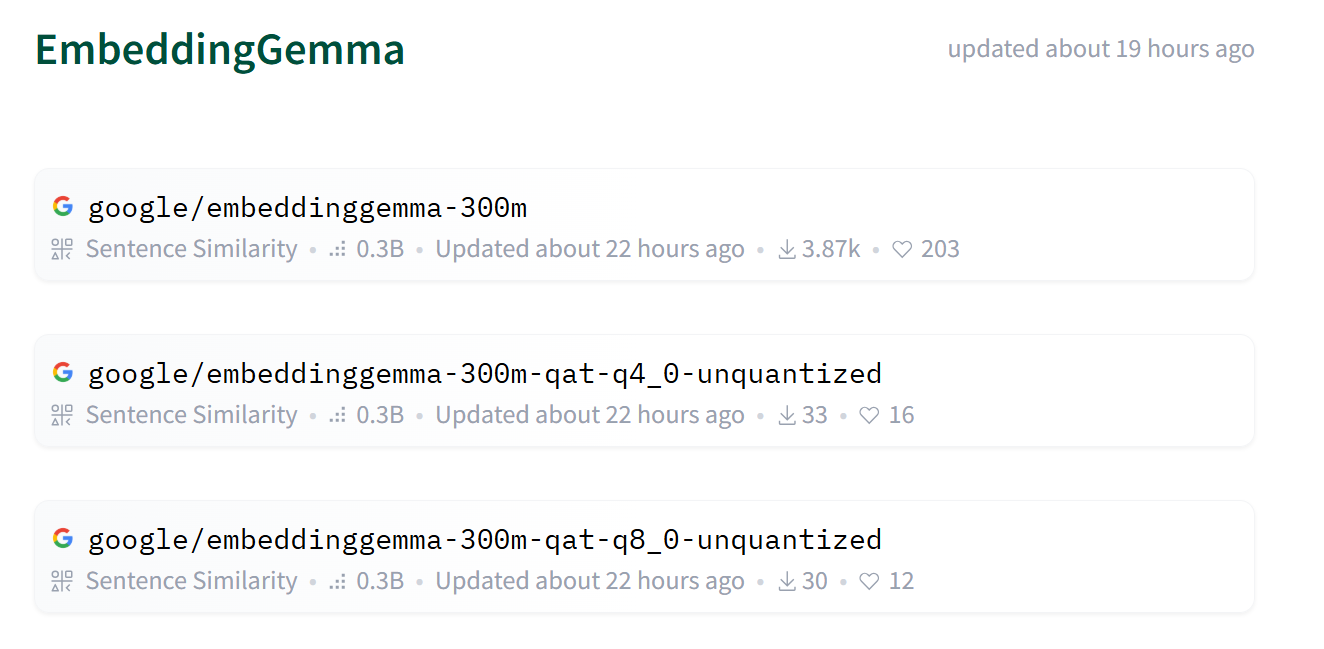

Today, Google released EmbeddingGemma, a new open embedding model.

With 308 million parameters, the model delivers strong results on minimal resources. It is designed specifically for edge AI and supports deploying Retrieval-Augmented Generation (RAG), semantic search, and other applications on devices such as laptops and smartphones.
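To make the semantic search use case concrete, here is a minimal sketch of how embedding vectors are typically compared: documents and the query are each mapped to a vector, and documents are ranked by cosine similarity to the query. The three-dimensional vectors and the document names below are made up for illustration. In a real application the vectors would come from an embedding model such as EmbeddingGemma and would have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical toy embeddings; a real model would produce these vectors.
doc_vecs = {
    "battery_tips": [0.9, 0.1, 0.0],
    "pasta_recipe": [0.0, 0.2, 0.9],
    "charger_guide": [0.7, 0.6, 0.1],
}
query_vec = [0.8, 0.3, 0.0]  # stand-in for an embedded user query

ranking = rank_documents(query_vec, doc_vecs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In a RAG pipeline, the top-ranked documents from a step like this would be passed to a generative model as context. Because both the embedding and the ranking can run locally, no query text needs to leave the device.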

A key feature of EmbeddingGemma is that it generates high-quality embedding vectors on the device itself, which protects user privacy and lets it work even without an internet connection. Its performance rivals that of Qwen-Embedding-0.6B, a model roughly twice its size.

According to Google, EmbeddingGemma has the following key highlights: