Google Open-Sources Gemma 3n: 2GB RAM, Top Sub-10B Multimodal AI

Google unveils Gemma 3n, an open-source multimodal AI model that runs in as little as 2GB of RAM while delivering cloud-level performance on edge devices like phones and tablets.


Edge devices are getting AI models built on a new architecture.

This Friday, Google officially released and open-sourced Gemma 3n, its new multimodal large model for edge devices.

Google states that Gemma 3n represents a significant advancement in on-device AI. It brings powerful multimodal capabilities to edge devices such as phones, tablets, and laptops, with performance that, as recently as last year, was available only from advanced cloud models.

Gemma 3n's key features include:

Multimodal Design: Gemma 3n natively supports image, audio, video, and text input with text output.

Optimized for Edge Devices: Gemma 3n models are designed for efficiency and come in two variants named for their effective parameter counts: E2B and E4B. Their raw parameter counts are 5B and 8B, respectively, but architectural innovations make their runtime memory footprint comparable to traditional 2B and 4B models, so they need only 2GB (E2B) and 3GB (E4B) of memory to run.

Architectural Breakthrough: At the core of Gemma 3n are novel components: the MatFormer architecture for computational flexibility, Per-Layer Embeddings (PLE) for improved memory efficiency, and a new audio encoder and MobileNet-v5-based vision encoder optimized for on-device use.

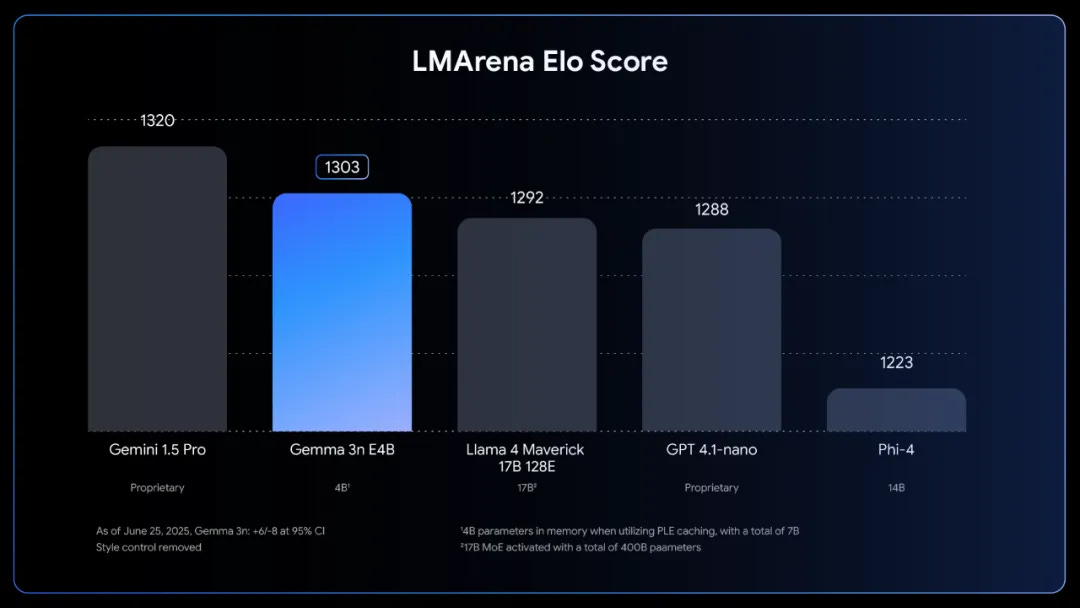

Quality Improvements: Gemma 3n improves quality in multilingual capabilities (text in 140 languages and multimodal understanding in 35 languages), mathematics, coding, and reasoning. The E4B version achieves an LMArena score exceeding 1300, making it the first model under 10 billion parameters to reach this benchmark.
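
The memory figures quoted for E2B and E4B can be put in perspective with some back-of-the-envelope arithmetic. The sketch below is illustrative only. The parameter counts and memory requirements are the ones quoted above, while the 2-bytes-per-parameter (16-bit) baseline, the 1 GB = 10^9 bytes convention, and all function and variable names are assumptions of this example rather than details from Google. It counts weights only and ignores activations and caches.

```python
# Figures quoted in the article (parameters in billions, memory in GB).
VARIANTS = {
    "E2B": {"raw_params_b": 5, "effective_params_b": 2, "stated_memory_gb": 2},
    "E4B": {"raw_params_b": 8, "effective_params_b": 4, "stated_memory_gb": 3},
}


def naive_footprint_gb(params_billion, bytes_per_param):
    """Weights-only memory if every parameter were resident at once.

    Assumes 1 GB = 1e9 bytes, so billions of parameters times bytes per
    parameter gives gigabytes directly.
    """
    return params_billion * bytes_per_param


def summarize(bytes_per_param=2.0):
    """Compare a naive estimate for the raw parameter count with the stated figure.

    bytes_per_param=2.0 (16-bit weights) is an assumed baseline, not a
    number from the article.
    """
    summary = {}
    for name, v in VARIANTS.items():
        naive = naive_footprint_gb(v["raw_params_b"], bytes_per_param)
        summary[name] = {
            "naive_raw_gb": naive,
            "stated_gb": v["stated_memory_gb"],
            "reduction_factor": naive / v["stated_memory_gb"],
            "effective_fraction": v["effective_params_b"] / v["raw_params_b"],
        }
    return summary


if __name__ == "__main__":
    for name, row in summarize().items():
        print(
            f"{name}: naive {row['naive_raw_gb']:.1f} GB vs stated "
            f"{row['stated_gb']} GB ({row['reduction_factor']:.1f}x smaller), "
            f"effective/raw parameters = {row['effective_fraction']:.2f}"
        )
```

Under these assumptions, the stated footprints come out roughly five times smaller than a naive 16-bit estimate for the raw parameter counts. The article does not specify the weight precision Google actually uses, so the gap should be read as a rough indication of what the architectural changes have to deliver, not as a measured result.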

Google states that achieving a leap in on-device performance required completely rethinking the model. Gemma 3n's mobile-first architecture is its foundation, and it all begins with MatFormer.