From Cheating Panic to an AI‑Smart English Language Arts Classroom

A field guide for faculty who’d rather harness student AI use than hunt it: three ground rules, classroom‑ready activities, and one experiment to run before the fall term.

This is the 19th guest post for "AI Disruption."

Dave Hallmon has been an adjunct professor for nearly two decades. He splits his days between lecture halls at two universities and project rooms in the corporate and government sectors, and he aims to bring real-world problems straight into his computer science, information systems, and management courses.

Lately, that “real world” has meant Generative AI: from prompting strategies that rescue stuck coders to ethics drills that test large language models for bias, his classrooms double as living labs where students learn to critique, iterate, and build alongside the machines that will shape their careers.

Dave is new to Substack, but you can visit his new Medium publication, TheNextClassroom_, a gathering place for educators swapping victories and face-plants as they fold Gen AI into everyday teaching. Join him (and other teachers like you) to trade lesson plans, cautionary tales, and big-picture questions about what it means to teach, learn, and lead in an AI-shaped world.

Until then, I’m honored to host him here at AI Disruption for some practical Gen AI field notes from front-line classrooms.

Remember the hush that falls when a timed essay begins—just keyboards and quiet panic? That moment is over. By the time your syllabus loads, half the class has already asked ChatGPT to outline the term paper. The real risk isn’t plagiarism; it’s letting the machine do the thinking while we keep grading like it’s 2015. This guide flips the script: if students will write with AI anyway, let’s make the process visible, the product accountable, and the learning unmistakably human.

The Panic Is Real—and Measurable

The New Yorker captured the mood on campuses in spring 2025: “What Happens After A.I. Destroys College Writing?” Professors confessed they were yanking students back to blue books and oral exams, stunned by how seamlessly ChatGPT could ghostwrite a paper. The fear isn’t abstract; it’s numeric. A February 2025 HEPI/Kortext survey of 1,041 undergrads found that 92% now use generative AI tools, and 88% have used them for graded work, a jump of more than thirty points in a single year.

Put bluntly: the copy‑and‑paste panic you feel is justified. But here’s the twist the numbers also reveal: students aren’t just cheating; they’re studying. Many say they lean on AI to unpack dense readings, brainstorm angles, or test‑drive thesis statements. Cheating and learning have collapsed into the same click.

So, where do we go from here? First, we ditch the whack‑a‑mole mindset. Instead of asking “How do I spot the fakes?” we ask “How do I teach the process so the product can’t be faked?”

That shift begins with three ground rules—Transparent, Responsible, and Efficient—that turn generative AI from a back‑alley shortcut into an above‑board writing partner.

Three Ground Rules for an AI-Smart Classroom

So, how do we channel the chaos? Start with a trio of principles that make every GenAI interaction visible, accountable, and worth the tuition check.

1️⃣ Transparent

Require a running prompt journal (Google Docs with version history works) where students paste every question, every raw blob, and a quick note on what they kept, cut, or rewrote. Post your journal first—warts and all. Once you model your “oops” moments, students relax and the moral panic dial turns down.

2️⃣ Responsible

Large language models are eloquent fabulists. Make fact‑checking part of the grade. Students vet claims against two solid sources, then add a one‑paragraph truth memo listing what checked out, what didn’t, and how they fixed it. Integrity moves from policy PDF to daily habit.

3️⃣ Efficient

First drafts from ChatGPT are usually oatmeal—quick carbs, no flavor. Teach students to reroll prompts at least ten times, compare versions, and steal only the lines that spark. Pair that with a sensory switch‑up mini‑game (replace three bland nouns with sights, sounds, or smells) and they’ll see how iteration plus human craft beats every one‑click essay mill.

Flex or Cheat? Busting the Big Myths

Every semester, I hear the same worries in the copy room:

“If a student even opens ChatGPT, that’s plagiarism.”

“The detectors will catch everything anyway.”

“Letting AI in the door will rot their critical-thinking skills.”

The trouble is, those claims are half-true at best and paralyze good pedagogy.

Bottom line? AI can be an academic shortcut or a strength‑training machine. The difference lies in whether we grade the invisible process or the shiny final paragraph.

Four Small Policies, Big Payoff

You don’t need a new LMS or an AI firewall—just a few guardrails that nudge students from “secret shortcut” to “visible craft.” Drop these on the syllabus and you’ll feel the culture shift by week two.

Google Docs + Gemini: Keep the receipts. Each student’s prompt journal lives in a single Doc with version history turned on. Mid‑term, you can scrub back like game film: bland first draft → metaphor pops. The timeline doubles as plagiarism insurance.

Classroom Prompt Library. Spin up a shared Doc titled “ENG 202 Prompt Pantry,” seed it with starters, and let students tag and refine it. Prompt writing becomes a literary craft, not a cheat code.

Grade the Revisions, Not Just the Result. Tie a chunk of the grade to revision notes captured in the prompt journal: “Swapped passive voice after Gemini suggestion,” “Cut para 2—hallucinated stats.”

PII ≠ Prompt Input (Privacy First). No real names, grades, or sensitive details ever go into consumer LLMs. Students learn a habit the industry is beginning to mandate: never feed the model what you wouldn’t print on a postcard.

These four tweaks turn GenAI from backstage accomplice to spotlighted collaborator.
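For instructors who would rather keep the prompt journal in a structured form than in a free-form Doc, the entry described above (prompt, raw output, and a note on what was kept, cut, or rewritten) could be sketched as follows. This is only an illustration: the `JournalEntry` class, its field names, and the `decision_counts` helper are assumptions made for this example, not part of any tool named in the article.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical labels for what the student did with the model's output.
DECISIONS = ("kept", "cut", "rewrote")


@dataclass
class JournalEntry:
    prompt: str      # the question the student asked
    raw_output: str  # the model's unedited reply
    decision: str    # one of DECISIONS
    note: str        # short revision note in the student's own words

    def __post_init__(self) -> None:
        # Reject entries that skip the keep/cut/rewrite decision.
        if self.decision not in DECISIONS:
            raise ValueError(f"decision must be one of {DECISIONS}")


def decision_counts(entries: list[JournalEntry]) -> Counter:
    """Tally how often a student kept, cut, or rewrote model output."""
    return Counter(entry.decision for entry in entries)


journal = [
    JournalEntry(
        prompt="Tighten my second paragraph.",
        raw_output="(model reply pasted here)",
        decision="rewrote",
        note="Swapped passive voice after Gemini suggestion",
    ),
    JournalEntry(
        prompt="Add statistics on reading habits.",
        raw_output="(model reply pasted here)",
        decision="cut",
        note="Cut para 2: hallucinated stats",
    ),
]

if __name__ == "__main__":
    print(decision_counts(journal))
```

A tally like this is not a grade. It simply gives a quick view of whether a journal shows real decisions or a run of uncritical "kept" entries.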

Draft with the Machine, Polish with Your Voice

Forget the blank-page stare-down; the first draft now takes minutes. The real artistry starts the moment you push back.

The Two‑Pass Draft

Machine Assist – Students feed a thesis and outline into ChatGPT; keep Track Changes on, accepting or rejecting lines live. Quick debrief: Which sentence rang hollow? Which metaphor felt worth saving?

Human Rewrite – Laptops to airplane mode. Students rewrite from scratch, lifting only phrases that still feel alive. Structure sticks, language freshens, and ownership returns.

The Sensory Switch‑Up Mini‑Game

Replace three AI nouns with sights, sounds, or smells. “The city was loud” → “Buses hissed while corner vendors sang of coffee.” Humor follows, and so does voice.

AI as Copy Editor

After the human draft is locked, run ChatGPT for cliché alerts; students decide what to fix, reinforcing agency while getting one last mechanical polish.

Catch the Hallucinations: An AI-Fact-Check Lesson

Large language models sound right even when hopelessly wrong: Shakespeare “invented 3,000 words”; Einstein “failed math.” This lesson turns that blind spot into intellectual Whac‑a‑Mole.

How it plays out: Flash a polished ChatGPT paragraph claiming Shakespeare coined 3,000 words, complete with a studious bibliography. Students vote True / Half‑True / Fabricated on sticky notes. Pairs then raid the OED or Britannica to verify. Groans follow as half the AI sources dissolve.

Debrief: Believability isn’t truth. Citation lists can lie. Human triangulation still rules.

Assess What Matters: From Pages to Portfolios

If AI can draft a term paper in 90 seconds, grading that paper for originality is like timing a microwaved marathon. Shift the spotlight to what the AI can’t fabricate: the thinking trail.

Build a Living Portfolio

Goal: Ask students to submit three artifacts for every major assignment:

Prompt Journal Excerpt – the raw chat exchange plus margin notes (“Kept metaphor,” “Flagged hallucination”).

Process Memo – 250 words on why they accepted, rejected, or rewrote key passages; which sources verified the facts; and where the model fell short.

Polished Draft – the final essay, story, or analysis with the usual MLA polish.

This trio turns assessment into a 3-D snapshot: idea genesis, iterative craft, and finished prose. Plagiarists hate it; genuine learners shine.

Simple Rubric

A simple rubric keeps sanity intact:

Because the journal and memo anchor 60 percent of the grade, students quickly realize AI can’t ghost-write self-reflection. The result? More time wrestling with ideas, and less time Googling “best plagiarism detector.”
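To see how that weighting plays out, here is a small arithmetic sketch. The article fixes only one number: the journal and memo together carry 60 percent. The even 30/30 split between them, the remaining 40 percent for the polished draft, and the function name `portfolio_grade` are assumptions made for illustration.

```python
# Assumed weights. Only "journal + memo = 60 percent" comes from the article;
# the 30/30 split and the 40 for the draft are illustrative guesses.
WEIGHTS = {
    "prompt_journal": 0.30,
    "process_memo": 0.30,
    "polished_draft": 0.40,
}


def portfolio_grade(scores: dict[str, float]) -> float:
    """Return the weighted grade (0-100) from per-artifact scores (0-100)."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing artifact scores: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


if __name__ == "__main__":
    # A flawless final essay paired with a thin journal and memo.
    thin_process = {"prompt_journal": 50, "process_memo": 60, "polished_draft": 100}
    print(round(portfolio_grade(thin_process), 1))
```

Under these assumed weights, even a perfect final draft cannot rescue a portfolio whose journal and memo are thin, which is the incentive the rubric is meant to create.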

Design Assignments That Need AI (and Still Test the Mind)

You’ve set the guardrails and collected the portfolios—now give students prompts that actually reward smart AI use instead of punishing curiosity. Think of the assignment as a circuit board: the machine handles the voltage, and the human routes the current into something meaningful.

Assignment Blueprint: “Dialogue With a Dead Author”

Goal: Practice close reading, argumentation, and responsible AI prompting.

Seed Prompt – Students feed ChatGPT a passage from Frankenstein and ask it to respond “in the voice of Mary Shelley” to a critical claim (e.g., “The monster is a stand-in for the Industrial Revolution”).

Interrogate the Voice – They reroll at least five times, tweaking temperature or angles, and annotate which attempts feel plausible, which slip into 21st-century slang, and which hallucinate context.

Human Counter-Response – Airplane mode on. Students write a rebuttal in their own voice, citing scholarly sources that either support or dismantle the AI-Shelley’s position.

Reflection – 150-word memo: What made the AI portrayal convincing or cringeworthy? How did multiple rerolls refine your thesis?

By design, the task is impossible to plagiarize wholesale—the grade hinges on how students wield the tool, not on the AI’s pastiche.

Teach Attribution & AI Literacy Inside the Task

Goal: Instead of a bolted-on citation lecture, bake literacy steps into the workflow:

Source Triangulation – Require one primary text citation, one peer-reviewed article, and one AI-generated idea that the student can defend.

AI Disclosure Line – Students prepend the reflection with: “Portions of this draft were generated with ChatGPT; see prompt journal dated 10/3 for details.”

Mini-Checkpoint – Mid-draft, conduct a five-minute “citation audit” where peers click every link in a partner’s notes. Dead links? Fabricated journals? They flag them on the spot. The audit moment isn’t punitive; it normalizes vetting AI-drafted evidence.
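If a partner's notes are long, it helps to pull every link into a checklist before the five-minute audit begins so that none is skipped. The sketch below does only that. It does not visit or verify anything, so the clicking and judging stay with the students. The function name `audit_checklist` and the simple URL pattern are assumptions for this example, and the pattern will truncate links that contain parentheses.

```python
import re

# Simple pattern: "http://" or "https://" up to whitespace or a bracket.
URL_PATTERN = re.compile(r"https?://[^\s<>()\[\]]+")


def audit_checklist(notes: str) -> list[str]:
    """Return each distinct link in the notes, in order of first appearance."""
    checklist: list[str] = []
    for match in URL_PATTERN.findall(notes):
        # Drop sentence punctuation or quotes that trail the link.
        url = match.rstrip(".,;:!?\"'")
        if url not in checklist:
            checklist.append(url)
    return checklist


if __name__ == "__main__":
    sample = (
        "Claim one cites https://example.org/a. "
        "Claim two cites (http://example.com/b) and https://example.org/a again."
    )
    for number, url in enumerate(audit_checklist(sample), start=1):
        print(f"[ ] {number}. {url}")
```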

Integrity by Design: Boundaries, Loops, Accountability

Goal: Make academic honesty an inside job:

Boundaries – “Use AI for idea generation, structure, and style suggestions. Do not paste AI text into your final without rewriting.”

Feedback Loop – The reflection asks, “Where did the AI mislead you? How did you correct it?” Grading criteria award points for spotting and fixing errors.

Accountability Artifact – The prompt journal + reflection go in the portfolio. A cheater would have to fabricate an entire decision trail—far harder than just doing the work.

Two Stretch Moves for the Adventurous

Ready to nudge the ceiling after the guardrails are in place? These micro-experiments push students to wrestle with AI in ways that sharpen meta-skills without adding marking overload. Try them as extra credit or a one-week lab.

Reverse-Prompt Challenge

Goal: Teach students to unpack genre conventions and stylistic fingerprints.

Give the class a mystery paragraph—one you secretly generated by feeding ChatGPT a prompt like, “Write a 120-word horror passage in the style of Edgar Allan Poe.”

Task: Working in pairs, students must write the prompt they think would have produced that exact output. They can iterate with ChatGPT as many times as they like, tweaking until their reproduction matches the target within, say, ten words.

Reflection: Each pair explains which lexical clues (rhythm, archaic diction, macabre imagery) signaled Poe versus, say, Lovecraft.
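One way to make "within, say, ten words" concrete is to count the words that differ between the mystery paragraph and a pair's reproduction. The sketch below uses Python's standard `difflib` module for an approximate word-level comparison. The function names `word_gap` and `close_enough` and the default tolerance of ten are assumptions, and the count is a rough measure, not a strict minimum edit distance.

```python
import difflib
import re


def word_gap(target: str, attempt: str) -> int:
    """Approximate count of words that differ between two passages."""
    # Compare lowercase word lists so punctuation and case are ignored.
    target_words = re.findall(r"[\w']+", target.lower())
    attempt_words = re.findall(r"[\w']+", attempt.lower())
    matcher = difflib.SequenceMatcher(
        a=target_words, b=attempt_words, autojunk=False
    )
    gap = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # A changed stretch costs as many words as its longer side.
            gap += max(i2 - i1, j2 - j1)
    return gap


def close_enough(target: str, attempt: str, tolerance: int = 10) -> bool:
    """True when the attempt is within the word tolerance of the target."""
    return word_gap(target, attempt) <= tolerance


if __name__ == "__main__":
    target = "Once upon a midnight dreary, while I pondered, weak and weary"
    attempt = "Once upon a midnight gloomy, while I pondered, weak and weary"
    print(word_gap(target, attempt), close_enough(target, attempt))
```

Because model output varies from run to run, a count like this works best as a conversation starter about which words carried the style, not as a pass/fail gate.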

Sonnets, Remixed

Goal: Marry close reading with iterative rewriting and voice ownership.

Stage 1 – AI Scaffold: Students ask Gemini to draft a sonnet on a theme of their choice—say, climate anxiety.

Stage 2 – Human Rewrite: With the scaffold onscreen, they keep the volta and line count but replace every AI descriptor with sensory specifics pulled from personal experience.

Stage 3 – Peer Swap: Partners highlight where the poem still “sounds like an AI” and suggest concrete revisions.

Stage 4 – Author’s Note: A 100-word statement that details which lines originated with AI, which were re-envisioned, and how the process altered their understanding of sonnet form.

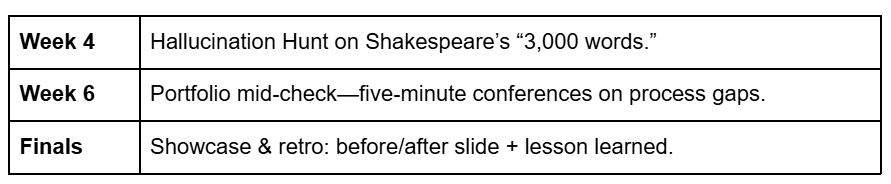

The Fall 2025 Roadmap: Pilot in Six Moves

In summary, consider the following in preparation for your Fall 2025 classrooms.

Join the Conversation

If generative AI is the asteroid, education doesn’t have to be the dinosaurs. Every tweak here—prompt journals, hallucination hunts, sensory rewrites—aims at the same prize: teaching students to think with technology instead of outsourcing thought to it.

Closing dare: try one small change this term. Rewrite a single assignment so the prompt, the rerolls, and the fact‑check memo matter as much as the final paragraph. Audit what happens, ask students what felt different, iterate, and share the results.

Bibliography

Kos'myna, N. (2025, June 25). Is Using ChatGPT to Write Your Essay Bad for Your Brain? New MIT Study Explained. MIT Media Lab.

Mollick, E. (2025, June 23). Using AI Right Now: A Quick Guide. One Useful Thing. Substack.

Chow, A. (2025, June 23). ChatGPT May Be Eroding Critical Thinking Skills, According to a New MIT Study. Time.

Hsu, H. (2025, June 30). What Happens After A.I. Destroys College Writing? The New Yorker.

Freeman, J. (2025, February 26). Student Generative AI Survey 2025. HEPI.

Turnitin. (2024, April 9). Turnitin marks one year anniversary of its AI writing detector with millions of papers reviewed globally.

ISTE. (2024). Evolving Teacher Education in an AI World.

U.S. Department of Education. (2023, May). Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations.

Google. (n.d.). Creating Helpful, Reliable, People-First Content.

Google. (n.d.). Guidance on Using Generative AI Content on Your Website.