DeepSeek-V3-Base Goes Open Source: Beats Claude 3.5, Boosts Programming Performance by 31%

DeepSeek-V3-Base, a 685B-parameter MoE model, outperforms Claude 3.5 and delivers a 31% boost in programming performance.

At the end of 2024, DeepSeek AI, a company exploring the essence of Artificial General Intelligence (AGI), open-sourced its latest mixture-of-experts (MoE) language model, DeepSeek-V3-Base. However, a detailed model card has not yet been released.

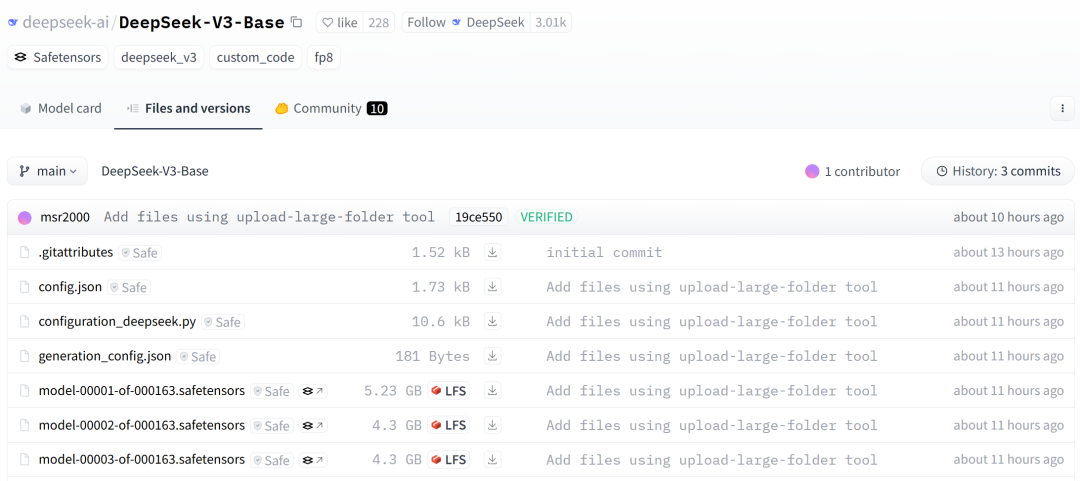

Hugging Face download link: https://huggingface.co/DeepSeek-ai/DeepSeek-V3-Base/tree/main

Specifically, DeepSeek-V3-Base has a 685B-parameter MoE architecture with 256 experts. It uses a sigmoid routing mechanism that selects the top 8 experts for each token (topk=8).
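The routing step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not DeepSeek's implementation. The toy hidden size, the random per-expert "centroid" vectors, and the renormalization of the selected gate weights are all assumptions made for the example. Details such as any load-balancing terms are omitted.

```python
import math
import random

NUM_EXPERTS = 256  # number of experts, as stated in the article
TOP_K = 8          # experts selected per token (topk=8), as stated in the article
HIDDEN = 16        # hypothetical toy hidden size; the real model is far larger


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route(token: list[float], centroids: list[list[float]], k: int = TOP_K) -> list[tuple[int, float]]:
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts."""
    # Score each expert independently: sigmoid of the token's dot product with
    # that expert's (hypothetical) learned centroid vector.
    scores = [sigmoid(sum(t * c for t, c in zip(token, cent))) for cent in centroids]
    # Keep only the k experts with the largest affinity scores, highest first.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Assumption: gate weights are renormalized over the selected experts so they sum to 1.
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]


if __name__ == "__main__":
    rng = random.Random(0)
    centroids = [[rng.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(NUM_EXPERTS)]
    token = [rng.gauss(0, 1) for _ in range(HIDDEN)]
    chosen = route(token, centroids)
    print(len(chosen), round(sum(w for _, w in chosen), 6))  # expected: 8 1.0
```

One design point the sketch highlights: unlike softmax gating, a sigmoid scores each expert on its own, so an expert's score does not depend on a normalization over all 256 experts. Any normalization happens only among the 8 experts that were actually selected.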

Although the model has many experts, only a small subset is active for any given token, which makes it highly sparse.
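A quick calculation makes this sparsity concrete. Here $E = 256$ is the number of experts and $k = 8$ is the number selected per token, both taken from the figures above. The ratio describes the expert layers only; it assumes a standard MoE Transformer layout, in which non-expert components such as attention still run for every token.

$$
\frac{k}{E} = \frac{8}{256} = \frac{1}{32} = 3.125\%
$$

For a batch of $T$ tokens, the expert layers therefore perform $8T$ expert forward passes instead of the $256T$ that running every expert on every token would require.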