DeepSeek-R1: Pure RL Cuts Costs by 90%, Milestone in AI Learning

Discover DeepSeek-R1: A game-changing AI model with OpenAI-level capabilities, open-sourced for free, sharing training secrets to pave the way for AGI.

OpenAI's Original Vision, Ultimately Realized by a Startup?

On January 19, DeepSeek began releasing the R1 preview version.

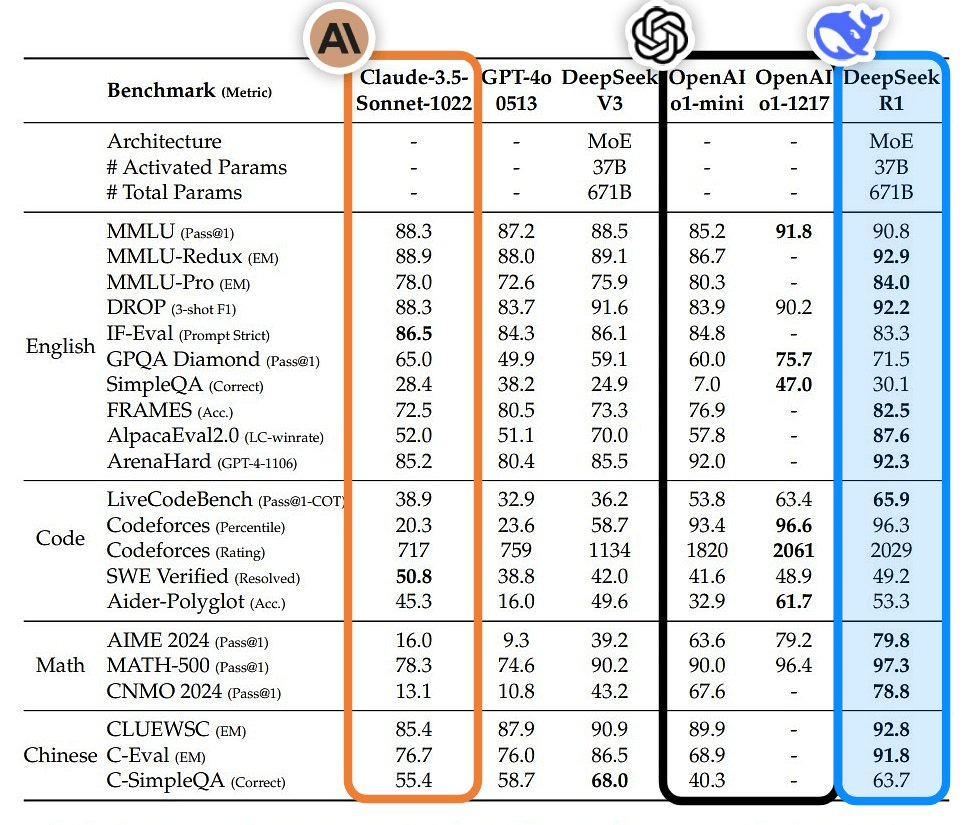

Last night, DeepSeek officially launched DeepSeek-R1, which, like OpenAI's o1, excels at mathematics, coding, and natural language reasoning.

The open-source large model DeepSeek-V3, released last December, had already caused a stir by achieving what many had considered impossible.

This time, the open-source R1 model stunned many AI researchers right from its release, prompting speculation about how it was achieved.

Casper Hansen, author of AutoAWQ, stated that DeepSeek-R1 uses a multi-stage cyclic training method: Foundation → RL → Fine-tuning → RL → Fine-tuning → RL.

Professor Alex Dimakis from UC Berkeley believes that DeepSeek is now in the lead, and U.S. companies may need to catch up.

Currently, DeepSeek has fully launched R1 on the web, app, and API platforms. The following image shows the web interface, where selecting DeepSeek-R1 provides immediate access.

Experience it here: https://www.deepseek.com/