DeepSeek R1-0528 Quietly Open-Sourced – Matches o3 in Tests

DeepSeek R1-0528 AI model quietly open-sourced! 685B params, rivals O3 & Claude 4. Free, MIT-licensed – test results inside

"AI Disruption" Publication 6700 Subscriptions 20% Discount Offer Link.

Beyond Everyone's Expectations.

After much anticipation, DeepSeek has finally released its reasoning model update.

Last night, DeepSeek officially announced that its R1 reasoning model has been upgraded to the latest version (0528), and the model and weights were made public early this morning.

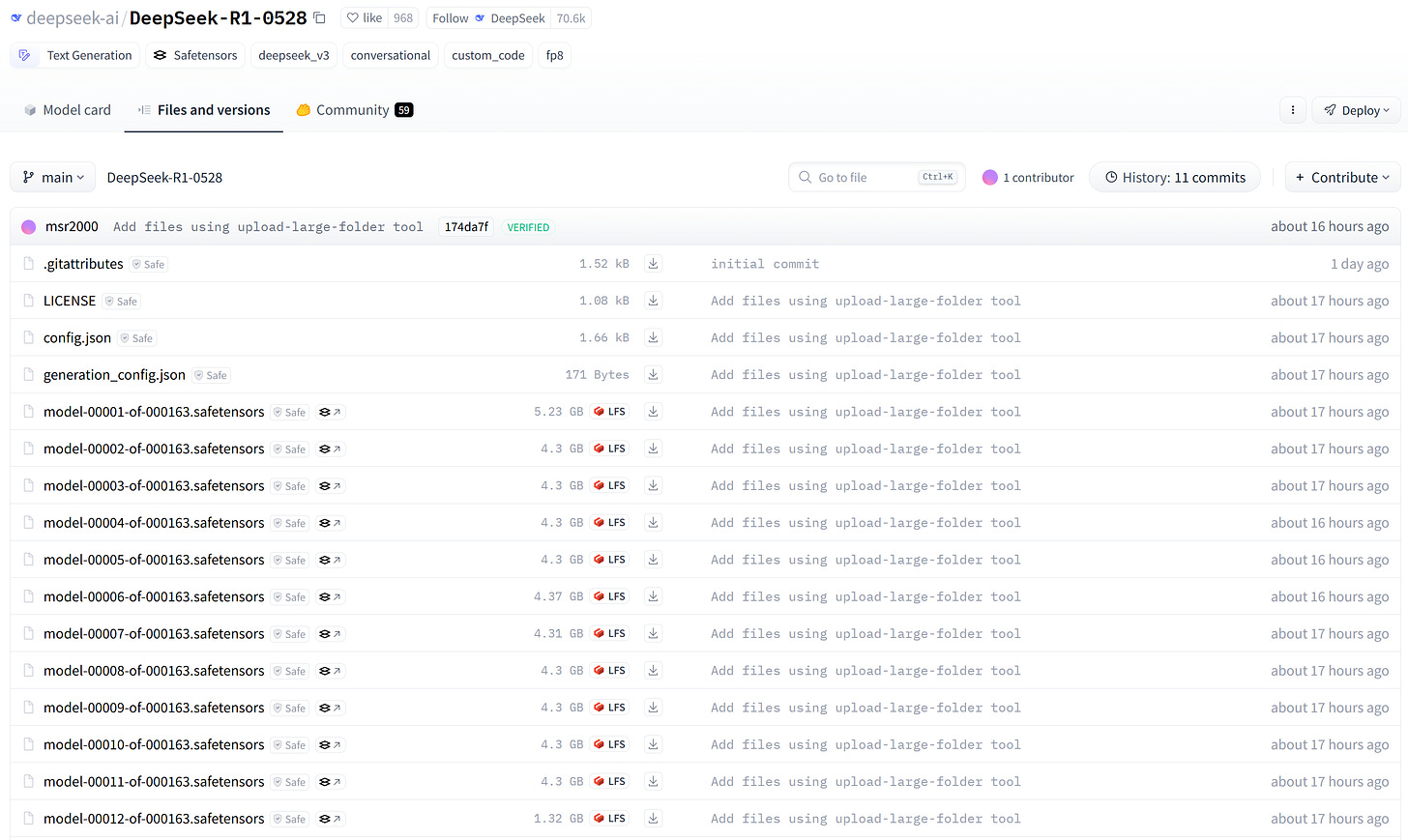

HuggingFace link: https://huggingface.co/deepseek-ai/DeepSeek-R1-0528

The model files were uploaded at 1 AM, making one wonder if DeepSeek engineers worked overtime until the very last moment. Some netizens also noted that once again, they released a new model right before China's Dragon Boat Festival holiday, proving more reliable than vacation announcements.

This upgraded R1 version has a massive 685 billion parameters. While it's open-sourced, most people can only observe from the sidelines. If the "full-blood version" isn't distilled, it definitely cannot run locally on consumer-grade hardware.

However, from initial feedback, this model is quite powerful, showing improvements over the original DeepSeek in long-term reasoning, intelligence level, and real-world applications.

Looking at its configuration in more detail, here's what we know:

Core remains the "Chain of Thought" reasoning, showing logical processes step by step, relatively transparent

Main focus unchanged: mathematical problems, programming challenges, scientific research

Architecture evolved but without major changes: still uses Mixture of Experts (MoE) design, 671B total parameters, activating only 37B each time for high efficiency

Training methods improved: used large-scale reinforcement learning + cold start data, addressing previous R1 model issues like infinite repetition and poor readability

However, this attitude of silently releasing links without fanfare has been widely welcomed by netizens.