DeepSeek in Nature: Making History Where OpenAI Didn't Dare

DeepSeek-R1 on the Cover of Nature: China's $300K AI Model Makes History, Surpassing OpenAI with a Peer-Reviewed Breakthrough.


Just today, the research behind DeepSeek's large language model DeepSeek-R1 was featured as the cover article of "Nature," one of the world's top scientific journals.

Unlike OpenAI's models, which cost tens of millions of dollars, this Chinese-built model, whose reasoning training cost only about $300,000, once rattled the US stock market and has now landed on the latest cover of Nature.

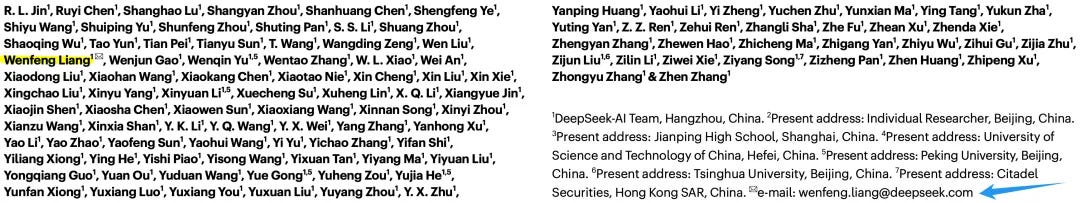

The cover article is R1's technical paper, which DeepSeek posted to arXiv at the beginning of the year: "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning."

Although it is largely the same as the early-year preprint, the Nature version adds considerably more detail.

The main text runs only 11 double-column pages, but the supplementary materials reach 83 pages. The peer review file, which records the exchanges between the reviewers and the DeepSeek team over specific issues in the paper (commonly called the "rebuttal"), spans another 64 pages.

These newly disclosed materials lay out DeepSeek-R1's training process in detail, and the team revealed for the first time that the key cost of training R1's reasoning capabilities was only $294,000.