DeepSeek OCR: Compress Everything Visually

DeepSeek-OCR: a 3B-parameter open-source OCR model that compresses long text contexts by nearly 10x, almost losslessly, by rendering them as images.


Rendering text as images may make it possible to compress context by nearly 10x with almost no loss.

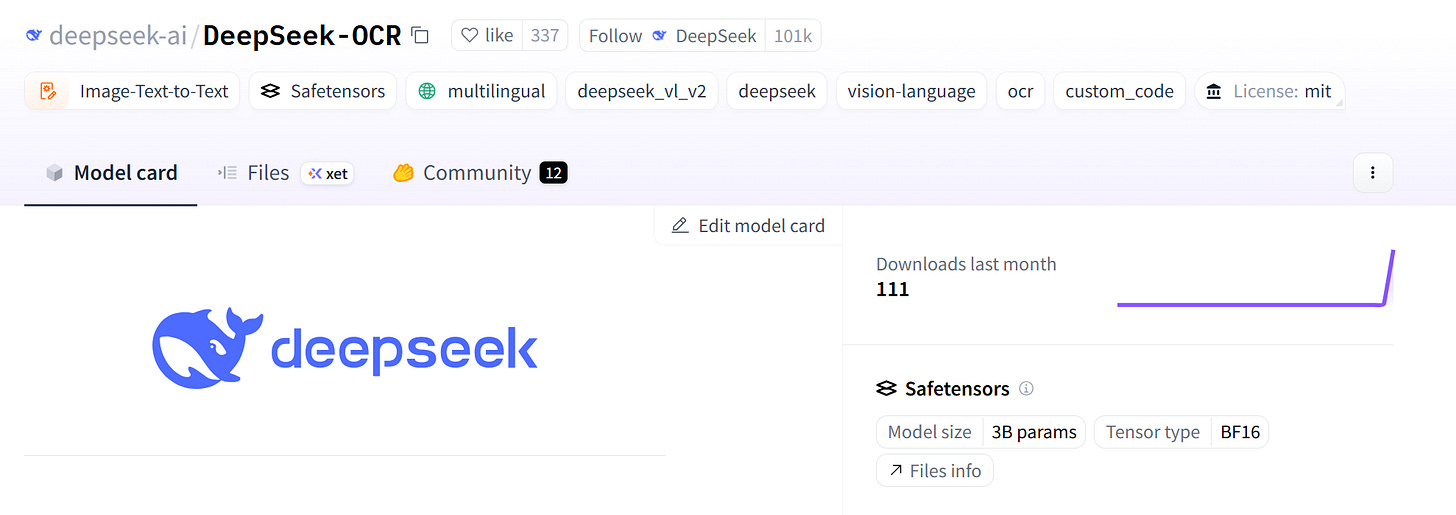

Surprisingly, DeepSeek has just open-sourced a new model: an OCR model. As the image above shows, the model has 3B parameters and had already passed 100 downloads shortly after its release.

The project was carried out by three DeepSeek researchers: Haoran Wei, Yaofeng Sun, and Yukun Li. The first author, Haoran Wei, previously worked at StepFun (Jieyue Xingchen), where he led development of the GOT-OCR2.0 system (arXiv:2409.01704), which aimed to achieve "second-generation OCR." That project has received over 7,800 stars on GitHub, so he is a natural choice to lead DeepSeek's OCR project.

DeepSeek describes DeepSeek-OCR as a preliminary exploration of whether long text contexts can be compressed through optical two-dimensional mapping, that is, by rendering the text as an image that a vision encoder reads.

The model primarily consists of two core components: DeepEncoder and the DeepSeek3B-MoE-A570M decoder.
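The "A570M" in the decoder's name refers to activated parameters: the decoder is a mixture-of-experts (MoE) model with about 3B parameters in total, of which roughly 570M are used for any given token. The sketch below is a generic top-k MoE router written only to illustrate that idea; it is not DeepSeek's implementation, and the dimensions, the expert count, `k`, and the helper names `top_k_moe` and `make_expert` are all hypothetical. Each token is scored against every expert, but only the k best-scoring experts actually run.

```python
import math
import random


def top_k_moe(x, gate_w, experts, k=2):
    """Route one token vector x through the top-k experts (generic sketch, not DeepSeek's router)."""
    # Router score per expert: dot product of the token with that expert's gate vector
    logits = [sum(xi * gi for xi, gi in zip(x, g)) for g in gate_w]
    # Indices of the k highest-scoring experts, best first
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts only (subtract the max for numerical stability)
    m = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Only the k chosen experts are evaluated; the others stay inactive for this token
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


def make_expert(d, rng):
    """Hypothetical expert: a random d x d linear map."""
    W = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)]
    return lambda v: [sum(wij * vj for wij, vj in zip(row, v)) for row in W]


rng = random.Random(0)
d, n_experts, k = 8, 6, 2
gate_w = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n_experts)]
experts = [make_expert(d, rng) for _ in range(n_experts)]
x = [rng.gauss(0, 1) for _ in range(d)]

out, chosen = top_k_moe(x, gate_w, experts, k)
print(len(out), len(chosen), f"active expert fraction: {k / n_experts:.2f}")
```

With 2 of 6 experts active, only a third of the expert weights are touched per token. The same principle, at much larger scale, lets a model hold billions of parameters while activating only a fraction of them for each token.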

DeepEncoder is the core engine: it keeps activations low even on high-resolution input while still achieving high compression ratios, so it produces a moderate, manageable number of visual tokens.
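To see why an encoder-side compression step matters, consider how token counts grow with resolution. The sketch below assumes, purely for illustration, that the image is cut into 16×16-pixel patches and that the encoder then reduces the patch count by 16x before handing tokens to the decoder. The function `vision_token_count`, both factors, and the example resolutions are hypothetical, not values stated in this article.

```python
def vision_token_count(width, height, patch=16, compress=16):
    """Estimate how many visual tokens reach the decoder.

    patch: side length of each square patch in pixels (assumed 16 here).
    compress: token-reduction factor applied inside the encoder (assumed 16x here).
    """
    if width % patch or height % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = (width // patch) * (height // patch)
    if patches % compress:
        raise ValueError("patch count must be divisible by the compression factor")
    return patches // compress


for side in (512, 640, 1024, 1280):
    patches = (side // 16) ** 2
    print(f"{side}x{side}: {patches} patches -> {vision_token_count(side, side)} visual tokens")
```

Under these assumptions a 1024×1024 page yields 4,096 patches but only 256 visual tokens after the reduction step, which is the kind of moderate token count a decoder can process cheaply.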

Experimental results show that when the number of text tokens is less than 10 times the number of visual tokens (i.e., a compression ratio below 10×), the model's decoding (OCR) accuracy reaches 97%; even at a 20× compression ratio, OCR accuracy remains at about 60%.
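The compression ratio here is simply the number of text tokens divided by the number of visual tokens used to encode them. The helpers below (hypothetical names) compute that ratio, check whether a page falls below the 10× threshold where about 97% accuracy was reported, and find the smallest visual-token budget that keeps a given amount of text under that threshold. They encode only the two operating points quoted above and do not model accuracy between or beyond them.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Number of text tokens represented by each visual token."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def in_high_precision_regime(text_tokens: int, vision_tokens: int, threshold: float = 10.0) -> bool:
    """True if the ratio is below the 10x point where ~97% accuracy was reported."""
    return compression_ratio(text_tokens, vision_tokens) < threshold


def min_vision_tokens(text_tokens: int, threshold: int = 10) -> int:
    """Smallest visual-token count that keeps the ratio strictly below `threshold`."""
    # text / n < threshold  <=>  n > text / threshold, so take floor(text / threshold) + 1
    return text_tokens // threshold + 1


for text, vision in [(900, 100), (2000, 100)]:
    r = compression_ratio(text, vision)
    print(f"{text} text / {vision} visual tokens -> {r:.1f}x, below 10x: {in_high_precision_regime(text, vision)}")

print(min_vision_tokens(1000))
```

The second case sits exactly at the 20× point, where accuracy was reported to fall to about 60%; a 1,000-token page would need at least 101 visual tokens to stay strictly below 10×.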