Claude Team Open-Sources an LLM Thought Visualization Tool

The Claude team's open-source circuit tracing tool visualizes LLM decision-making, helping researchers decode AI logic and behavior.


The Claude team is diving into open source.

They’ve launched a “circuit tracing” tool to help everyone understand the “brain circuits” of large models and track their thought processes.

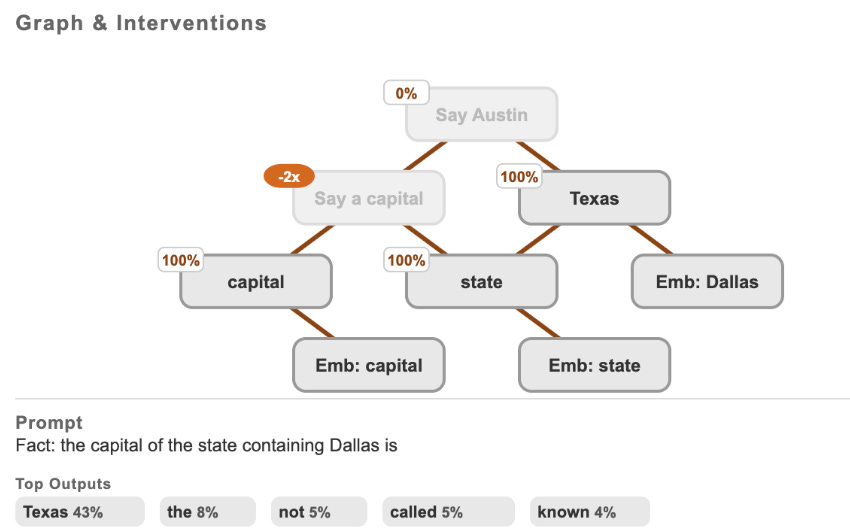

At the core of the tool is the generation of attribution graphs. Much like a wiring diagram of the brain's neural circuits, an attribution graph visualizes a model's internal supernodes and the connections between them, revealing the pathways through which an LLM processes information.
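To make the idea of an attribution graph concrete, here is a minimal sketch on a purely linear toy network. In this simplification, each edge's weight is just the source node's activation times the connection weight, so the edges into a node add up to that node's activation; edges below a threshold are pruned to keep the graph readable. The feature names, the `forward` and `attribution_graph` helpers, and the threshold are all hypothetical illustrations, not the released library's API or its exact method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str
    target: str
    weight: float  # direct contribution of the source's activation to the target


def forward(layers, inputs):
    """Purely linear toy forward pass; returns one activation dict per layer."""
    activations = [dict(inputs)]
    for layer in layers:
        prev = activations[-1]
        activations.append({
            target: sum(w * prev[src] for src, w in incoming.items())
            for target, incoming in layer.items()
        })
    return activations


def attribution_graph(layers, inputs, threshold=0.0):
    """Return activations plus every edge whose |activation * weight| exceeds threshold."""
    activations = forward(layers, inputs)
    edges = []
    # activations[i] holds the sources feeding layers[i].
    for prev, layer in zip(activations, layers):
        for target, incoming in layer.items():
            for src, w in incoming.items():
                contribution = prev[src] * w
                if abs(contribution) > threshold:
                    edges.append(Edge(src, target, contribution))
    return activations, edges


# Hypothetical features for a prompt about the capital of the state containing Dallas.
INPUTS = {"Dallas": 1.0, "capital": 1.0, "the": 0.5}
LAYERS = [
    {"say_capital": {"capital": 1.0, "the": 0.1}, "Texas": {"Dallas": 2.0}},
    {"Austin": {"say_capital": 1.0, "Texas": 0.5}},
]

activations, edges = attribution_graph(LAYERS, INPUTS, threshold=0.1)
for edge in edges:
    print(f"{edge.source} -> {edge.target}: {edge.weight:.2f}")
```

Because the toy network is linear, the unpruned edges into `Austin` sum exactly to its activation (1.05 + 1.00 = 2.05). Pruning the weak `the -> say_capital` edge gives up a little of that completeness in exchange for a cleaner picture of the main pathway.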

Researchers can intervene on node activation values and observe how the model's behavior changes. This verifies the functional role of each node and, in turn, decodes the "decision logic" of the LLM.
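The intervene-and-observe loop can be sketched on a toy linear network with hypothetical feature names: clamp one or more node activations, rerun the forward pass, and check whether the output shifts the way the hypothesis predicts. The `run` and `top_output` helpers and the `overrides` parameter are illustrative assumptions, not the intervention interface of the released library.

```python
def run(layers, inputs, overrides=None):
    """Linear toy forward pass; nodes named in `overrides` are clamped to the given value."""
    overrides = overrides or {}
    acts = {name: overrides.get(name, value) for name, value in inputs.items()}
    for layer in layers:
        computed = {
            target: sum(w * acts[src] for src, w in incoming.items())
            for target, incoming in layer.items()
        }
        # Clamp intervened nodes so that downstream layers see the edited value.
        for target in computed:
            if target in overrides:
                computed[target] = overrides[target]
        acts.update(computed)
    return acts


def top_output(layers, inputs, overrides=None):
    """Return the highest-scoring node in the final layer."""
    acts = run(layers, inputs, overrides)
    return max(layers[-1], key=lambda name: acts[name])


# Hypothetical features; "California" receives no input from this prompt.
INPUTS = {"Dallas": 1.0, "capital": 1.0}
LAYERS = [
    {"say_capital": {"capital": 1.0}, "Texas": {"Dallas": 2.0}, "California": {}},
    {
        "Austin": {"say_capital": 0.5, "Texas": 1.0},
        "Sacramento": {"say_capital": 0.5, "California": 1.0},
    },
]

baseline = top_output(LAYERS, INPUTS)
# Hypothesis: the "Texas" node is what routes the answer to Austin.
swapped = top_output(LAYERS, INPUTS, overrides={"Texas": 0.0, "California": 2.0})
print(baseline, "->", swapped)
```

If suppressing `Texas` and injecting `California` flips the answer from `Austin` to `Sacramento`, that supports the hypothesis that the `Texas` node carries the state information; if the answer did not change, the hypothesis would need revisiting.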

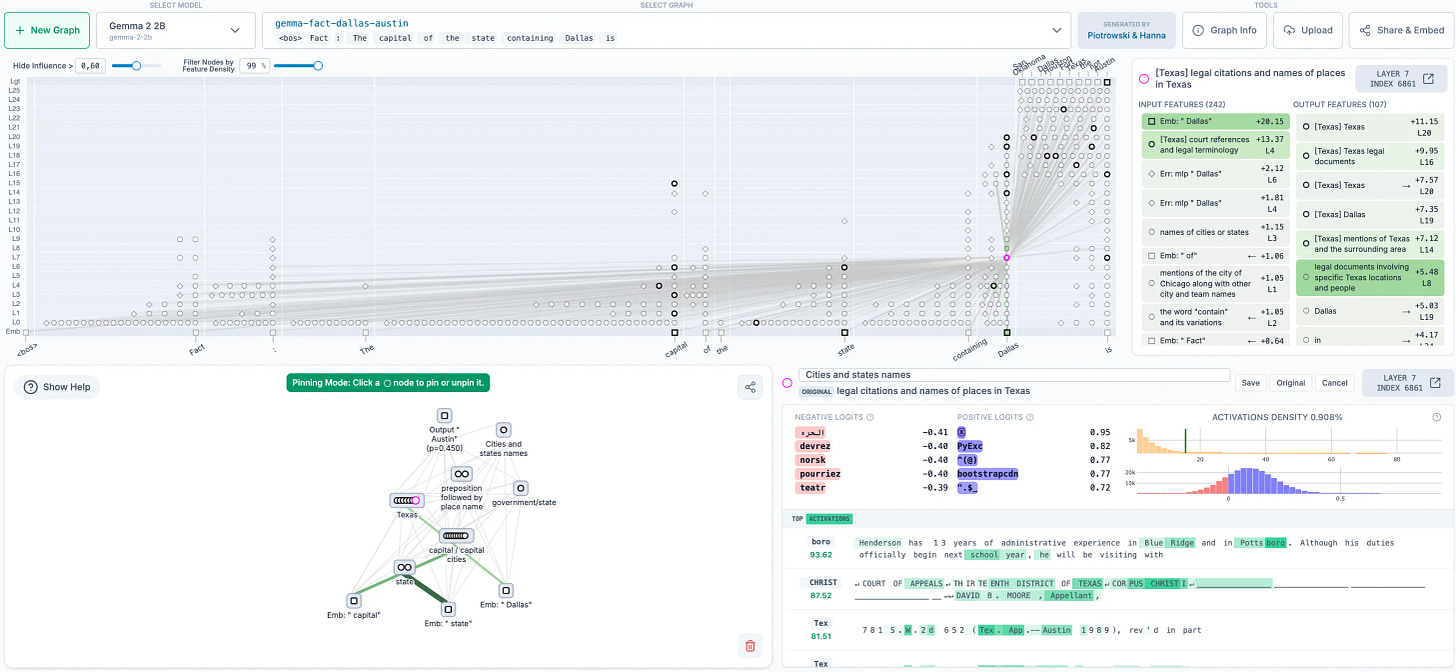

According to the official announcement, the open-source library supports quickly generating attribution graphs for popular open-weight models, while a frontend hosted on Neuronpedia lets users explore those graphs interactively.

In summary, researchers can:

Generate their own attribution graphs for circuit tracing on supported models;

Visualize, annotate, and share graphs in an interactive frontend;

Modify feature values and observe changes in model outputs to validate hypotheses.

Anthropic CEO Dario Amodei stated:

Currently, our understanding of the inner workings of AI lags far behind its capabilities. By open-sourcing these tools, we hope to make it easier for the broader community to study the internal mechanisms of language models. We look forward to seeing these tools applied to understanding model behavior and to further improvements and expansions of the tools themselves.