ByteSeed Debuts Open-Source Code Model with SOTA Performance

ByteDance's Seed-Coder: Open-source 8B code model beats Qwen3 & DeepSeek-R1, achieves SOTA with self-curated data.


ByteDance's Seed team has released its first open-source code model!

Seed-Coder, an 8B-scale model, surpasses Qwen3 and achieves multiple SOTA results.

It demonstrates that "with minimal human involvement, LLMs can autonomously manage code training data."

By self-generating and filtering high-quality training data, the model significantly enhances its code generation capabilities.

This can be seen as an extension of DeepSeek-R1's strategy for self-generating and filtering training data.

The model comes in three versions: Base, Instruct, and Reasoning.

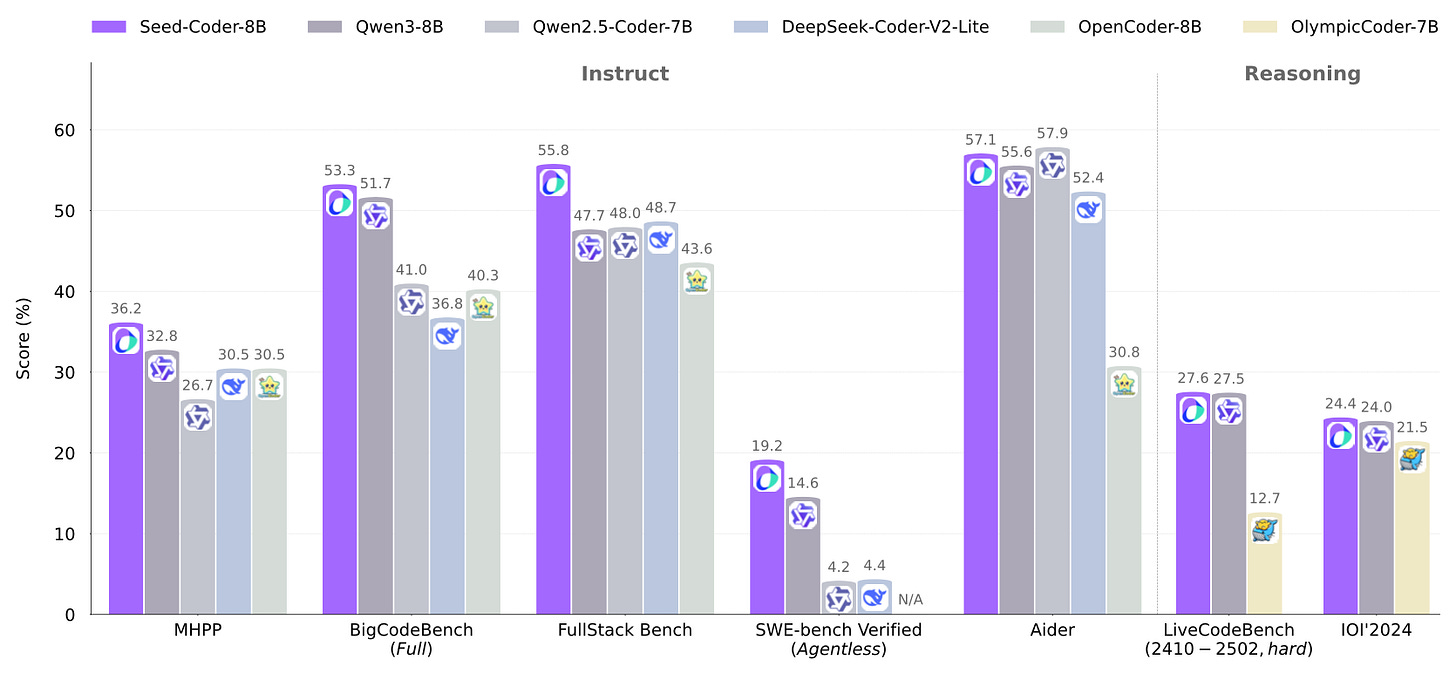

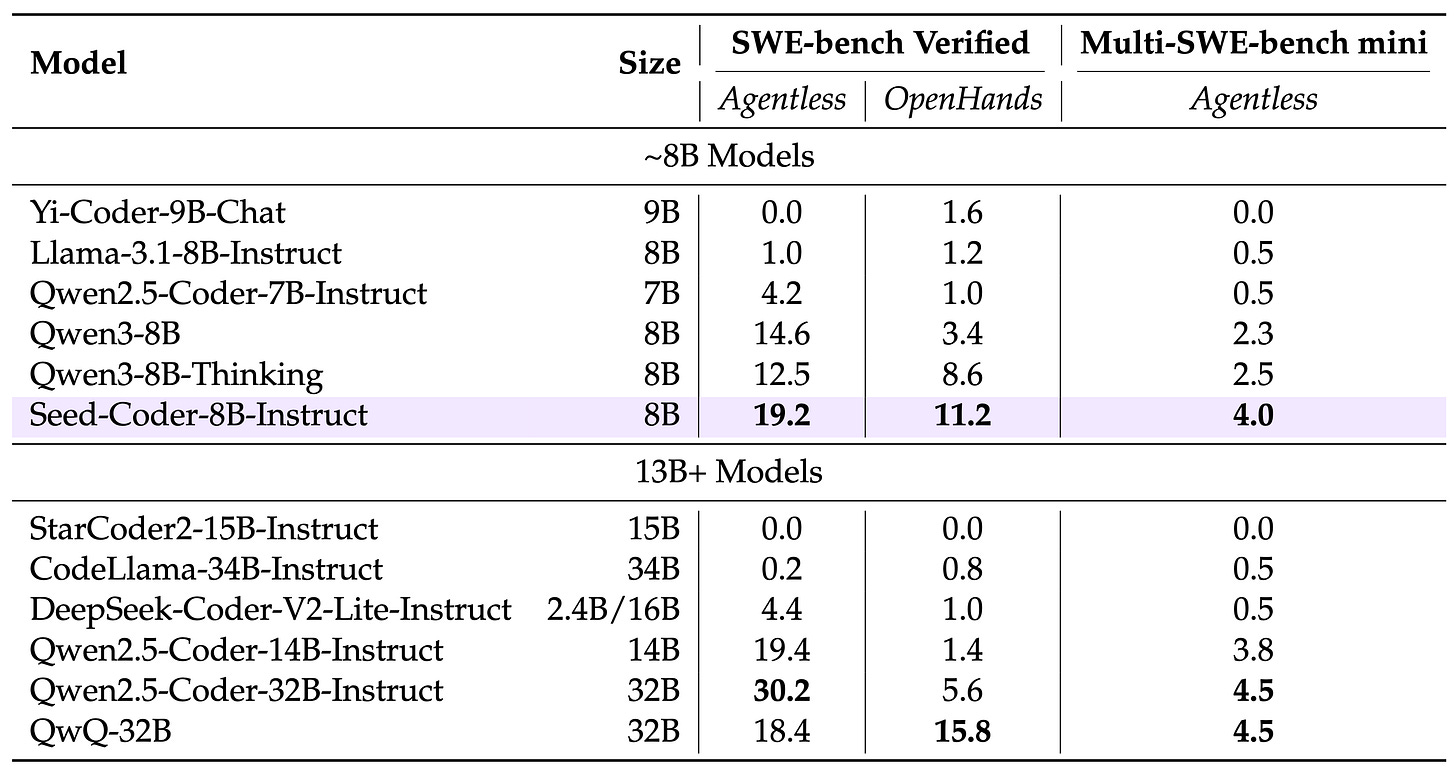

Among them, the Instruct version excels at programming tasks, achieving SOTA results on two benchmarks.

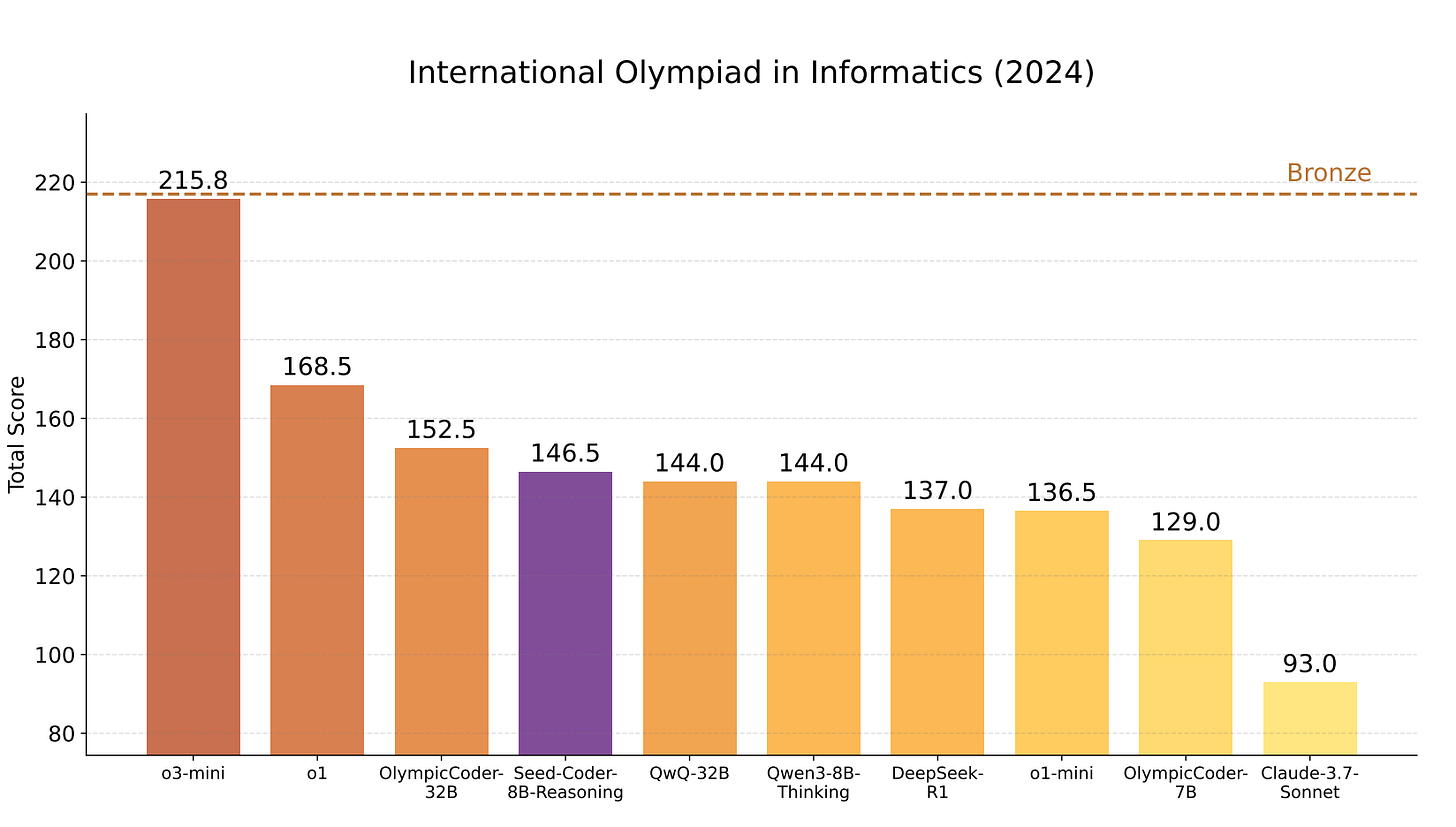

The Reasoning version outperforms QwQ-32B and DeepSeek-R1 on IOI 2024.