Baidu Open-Sources ERNIE 4.5 Series, Outperforms DeepSeek-V3

Baidu open-sources 10 ERNIE 4.5 models, including Mixture of Experts (MoE) models with up to 424B total parameters and multimodal capabilities, and reports benchmark results ahead of competitors such as DeepSeek-V3.


Today, Baidu officially open-sourced the ERNIE 4.5 series models.

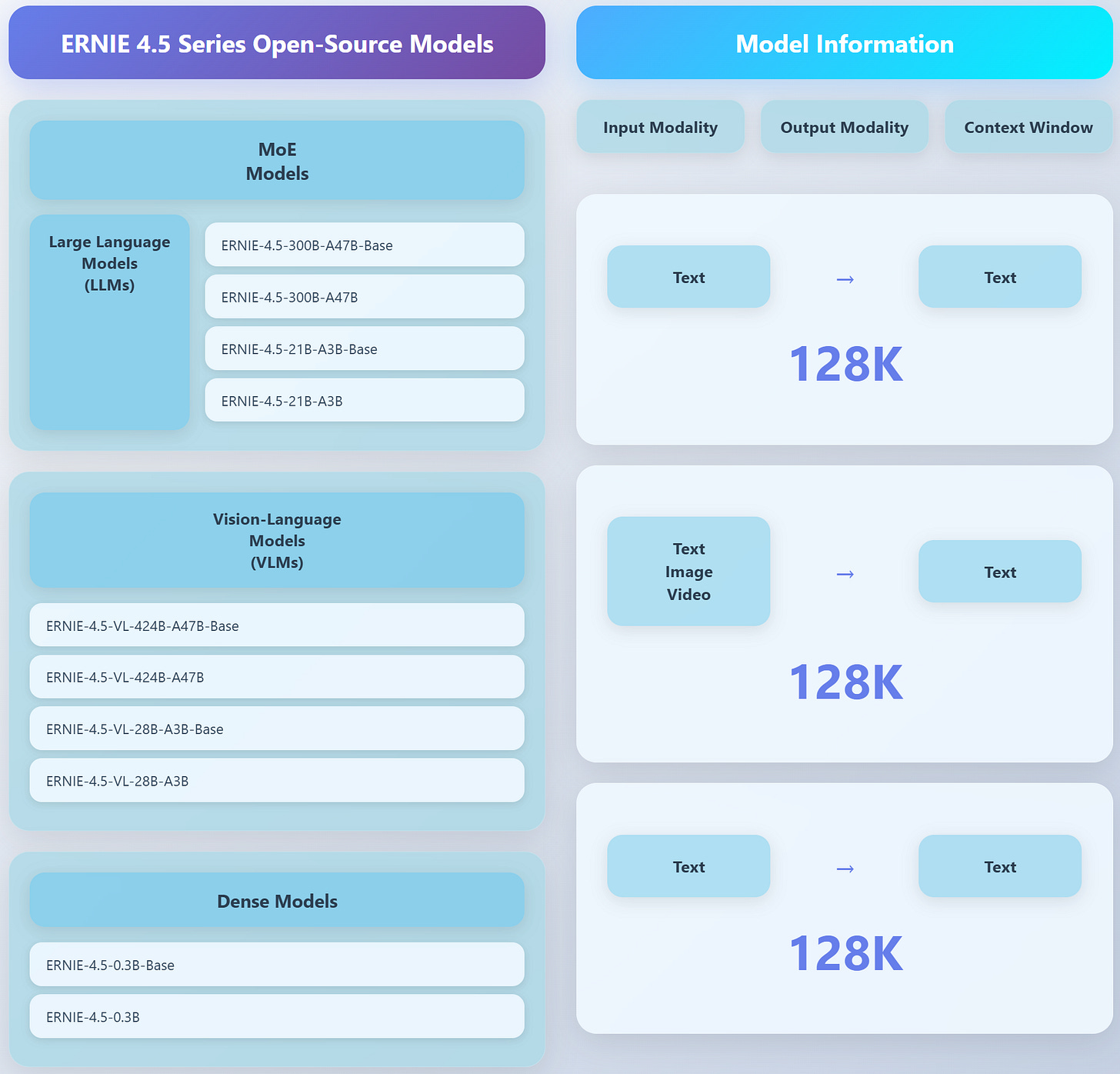

The ERNIE 4.5 open-source series comprises 10 models: Mixture of Experts (MoE) models with 47B and 3B activated parameters (the largest has 424B total parameters), plus a 0.3B-parameter dense model. Both the pre-trained weights and the inference code are fully open-sourced.
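To make the difference between "activated" and "total" parameters concrete, here is a minimal Python sketch. It assumes the 424B-total model is the one with 47B activated parameters, which the figures above suggest but do not state outright. The class name, the label, and the 2-bytes-per-parameter (16-bit weights) figure are illustrative assumptions, not anything published by Baidu.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    """Parameter counts in billions. The name is a label, not an official model ID."""
    name: str
    total_params_b: float   # all experts plus shared layers
    active_params_b: float  # parameters actually used per token

    @property
    def active_fraction(self) -> float:
        # Share of the model that participates in each forward pass.
        return self.active_params_b / self.total_params_b


def weight_memory_gb(params_b: float, bytes_per_param: int = 2) -> float:
    # Billions of parameters x bytes per parameter = gigabytes of weights.
    # Assumes 16-bit weights by default; ignores activations and KV cache.
    return params_b * bytes_per_param


# Assumption: the largest (424B) model pairs with the 47B activated size.
largest = MoEModel("largest ERNIE 4.5 MoE", total_params_b=424.0, active_params_b=47.0)

print(f"{largest.name}: {largest.active_fraction:.1%} of parameters active per token")
print(f"Weights to store: ~{weight_memory_gb(largest.total_params_b):.0f} GB")
print(f"Weights used per token: ~{weight_memory_gb(largest.active_params_b):.0f} GB")
```

Under these assumptions, only about 11% of the weights are used for any given token, which cuts per-token compute. All experts still have to be stored, though, so the memory footprint follows the total parameter count.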

The ERNIE 4.5 open-source models can currently be downloaded and deployed from platforms such as the PaddlePaddle Galaxy Community and Hugging Face, and API access to the open-source models is available on Baidu Intelligent Cloud's Qianfan Large Model Platform.