AI Can Train on Purchased Books Without Author Consent, Court Rules

US court rules Anthropic can use legally purchased books to train AI without author consent, citing fair use. Pirated books ruled infringing.


AI companies can now use legally purchased books as training data without the original authors' consent.

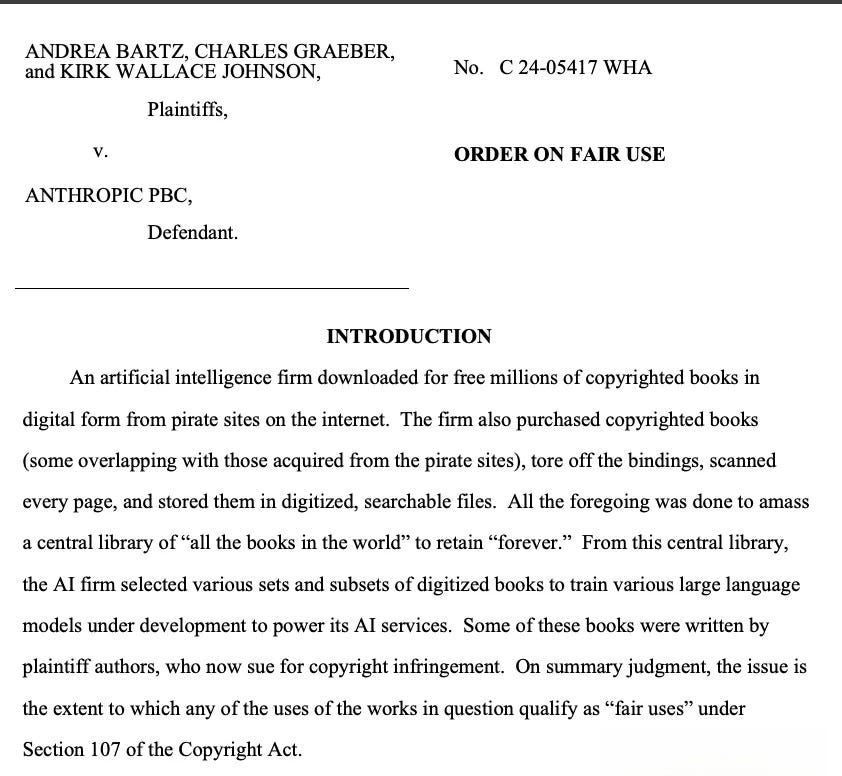

In a recent ruling, a US court allowed Anthropic, the company behind Claude, to train its AI on legally purchased published books without the authors' permission.

The court relied on the "fair use" doctrine in US copyright law, finding that AI training constitutes "transformative use": the new use of the original works does not replace their original market, and it benefits technological innovation and the public interest.

This is the first time a US court has recognized AI companies' right to use books in this way, shielding them from restrictions on using copyrighted text to train large language models (LLMs).

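This significantly reduces copyright risk for AI training data, at least for lawfully acquired books; the same ruling found the use of pirated books to be infringing.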

Many commenters online take this view: since humans reading and comprehending books is uncontroversial, AI reading and understanding books should be considered reasonable too.