1K Samples Beat O1, Rivals DeepSeek-R1—Fei-Fei Li's S1 Released

DeepSeek R1 sparked AI breakthroughs, but now s1-32B takes test-time scaling further—outperforming o1 with just 1K samples. Discover how budget forcing enhances AI reasoning.

"AI Disruption" publication New Year 30% discount link.

Tell the Large Model: Think More

This January, DeepSeek R1 ignited the global tech community. Its innovative approach, which dramatically reduces computational requirements, shook NVIDIA’s trillion-dollar market cap and prompted industry-wide reflection.

On the road to AGI (Artificial General Intelligence), we no longer need to focus solely on scaling up compute power; more efficient new methods open up a wealth of innovative possibilities.

Recently, technology companies and research teams around the world have been trying to replicate DeepSeek R1. But if someone were to say right now, “I can significantly improve AI’s reasoning efficiency,” what would you think?

This new method is called s1.

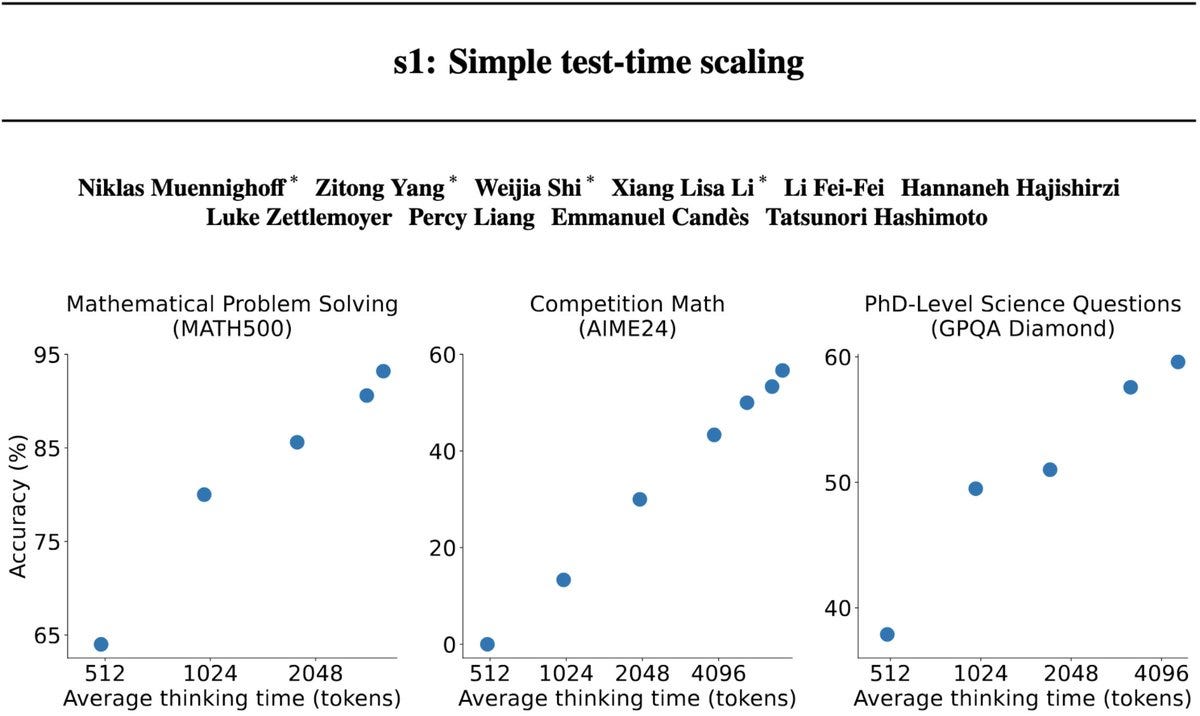

This week, researchers from institutions including Stanford University and the University of Washington tried the simplest way to implement test-time scaling. The resulting model achieves strong reasoning performance that surpasses o1, despite being trained on only 1,000 questions.

Test-time scaling is a promising new approach in language modeling: the model spends additional computation at test time (that is, during inference rather than training) to improve its performance.
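To make the idea concrete, here is a minimal toy sketch of one well-known form of test-time scaling: sampling several answers and taking a majority vote. This is not the s1 method and involves no real model. The `noisy_solver` function, its 40% accuracy, and the answer values are all hypothetical stand-ins chosen for illustration. The only point is that spending more computation per question at inference time can raise accuracy without any retraining.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42  # hypothetical ground truth for the toy question


def noisy_solver(rng, p_correct=0.4):
    """Hypothetical stand-in for one sampled model answer.

    Returns the right answer with probability p_correct,
    otherwise one of several distinct wrong answers.
    """
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    return rng.choice([7, 13, 99, 100])


def answer_with_budget(rng, num_samples):
    """Spend more test-time compute: draw num_samples answers, return the most common."""
    votes = Counter(noisy_solver(rng) for _ in range(num_samples))
    return votes.most_common(1)[0][0]


def accuracy(num_samples, trials=2000, seed=0):
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(answer_with_budget(rng, num_samples) == CORRECT_ANSWER
               for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"samples per question: {n:2d}  accuracy: {accuracy(n):.3f}")
```

In this toy setup, accuracy rises as the per-question sample budget grows, because the correct answer is the single most likely output while the errors are spread across several alternatives. Real systems face harder cases, such as errors that are correlated across samples, so the curve here should be read as an illustration only.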

Previously, OpenAI’s o1 model demonstrated this capability, though its method was not publicly shared. Numerous efforts have since been made to replicate o1, involving techniques like Monte Carlo tree search, multi-agent systems, and more.