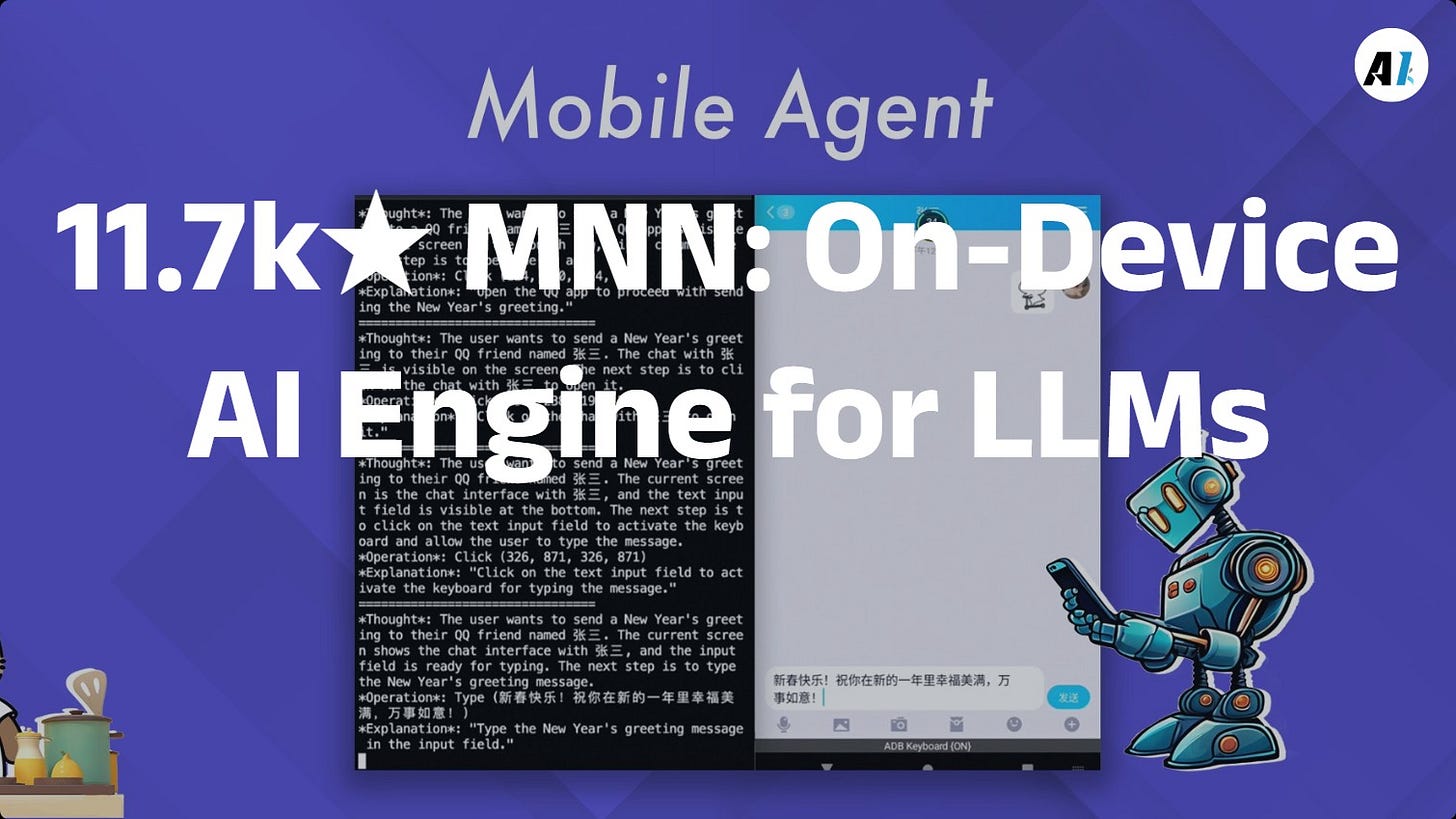

11.7k-Star MNN: Mobile AI Acceleration Engine for On-Device LLM Deployment

MNN: a lightweight AI engine for mobile and embedded devices, optimized for LLMs, with fast inference and low memory use. Used in Alibaba apps.


MNN is a deep learning framework designed specifically for mobile and embedded devices, renowned for its lightweight nature, versatility, high performance, and ease of use.

It has been widely applied in various Alibaba business scenarios, including Taobao, Tmall, Youku, and DingTalk, covering over 70 use cases such as image recognition, speech processing, and natural language processing.

When deploying deep learning models on mobile devices, developers typically face the following challenges:

Performance Bottlenecks: Mobile devices have limited computational power, so traditional deep learning frameworks run slowly on them and struggle to meet real-time inference demands such as face recognition in live streaming or speech-to-text conversion.

Model Size: Deep learning models are often large and consume significant storage space, while mobile devices have limited storage capacity, which makes large models difficult to accommodate.

Compatibility Issues: Model formats vary across deep learning frameworks (e.g., TensorFlow, Caffe), which complicates conversion and deployment and adds development effort.

High Development Barrier: Deep learning frameworks typically require advanced programming skills and specialized knowledge, making it hard for developers without that background to get started quickly.
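To make the model-size challenge concrete, here is a rough estimate of raw weight storage as parameter count times bytes per parameter. This is a minimal sketch under stated assumptions. The parameter counts are hypothetical round numbers chosen for illustration. The estimate ignores file-format overhead and the runtime memory needed for activations. It is not a measurement of MNN or of any specific model.

```python
# Rough weight-storage estimate: parameter count x bytes per parameter.
# Assumption: the parameter counts below are hypothetical, for illustration only;
# file-format overhead and activation memory are not included.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_size_gb(num_params: int, dtype: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    examples = [
        ("hypothetical vision model, 25M params", 25_000_000),
        ("hypothetical LLM, 7B params", 7_000_000_000),
    ]
    for name, params in examples:
        for dtype in BYTES_PER_PARAM:
            print(f"{name:40s} {dtype:5s} {weight_size_gb(params, dtype):8.2f} GB")
```

For the hypothetical 7B-parameter LLM, this works out to about 28 GB of weights at fp32 and 14 GB at fp16. Storage scales linearly with bytes per parameter, so lower-precision weights shrink the footprint proportionally.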

MNN is optimized to address these pain points, providing an efficient, user-friendly solution that lets developers implement deep learning applications on mobile devices with ease.